Series

Get Started with NEON Data: A Series of Data Tutorials

This Data Tutorial Series is designed to provide you with an introduction to how to access and use NEON data. It includes both foundational skills for working with NEON data, and tutorials that focus on specific data types, which you can choose from based on your interests.

Foundational Skills and Tools to Access NEON Data

- Start with a short video guide to downloading data from the NEON Data Portal.

- The Download and Explore NEON Data tutorial will guide you through using the neonUtilities package in R and/or the neonutilities package in Python, to download and transform NEON data and to use the metadata that accompany data downloads to help you understand the data.

- Many more details about the neonUtilities package, and the input parameters available for its functions, are available in the neonUtilities cheat sheet.

- Using an API token can make your downloads faster, and helps NEON by linking your user account to your downloads. See more information about API tokens here, and learn how to use a token with neonUtilities in this tutorial.

- NEON data are initially published as Provisional and are subject to change; each year NEON publishes a data Release that does not change and can be cited by DOIs. Learn about working with Releases and Provisional data.

- Release availability and general data management principles are a little different for remote sensing data than sensor and observational data; learn about best practices for AOP data management and Releases.

- Learn how to work with NEON location data, using examples from vegetation structure observations and soil temperature sensors.

Introductions to Working with Different Data Types

- Explore the intersection of sensor and observational data with the Plant Phenology & Temperature tutorial series (individual tutorials that make up the series are listed in the sidebar). This is also a good introduction for inexperienced R users.

- Get familiar with NEON sensor data flagging and data quality metrics, using aquatic instrument data as exemplar datasets.

- Use the neonOS package to join tables and flag duplicates in NEON observational data.

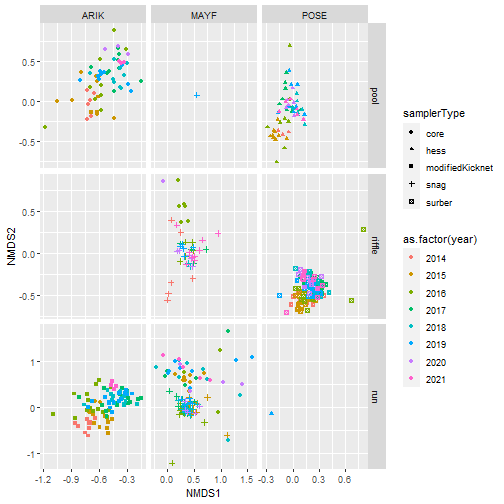

- Calculate biodiversity metrics from NEON aquatic macroinvertebrate data.

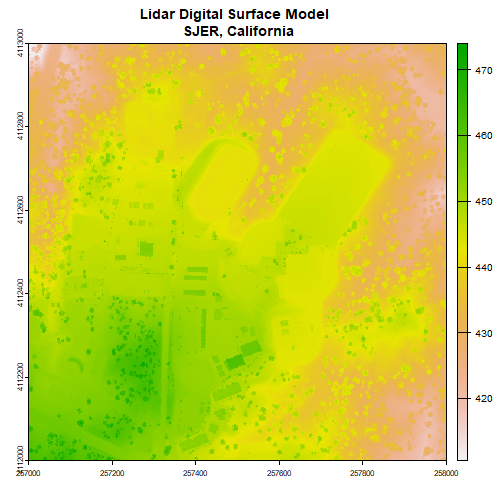

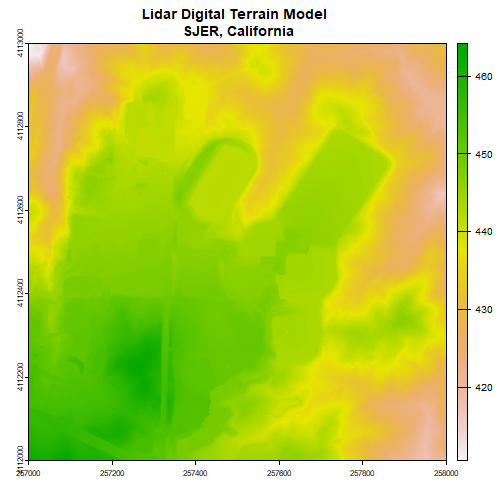

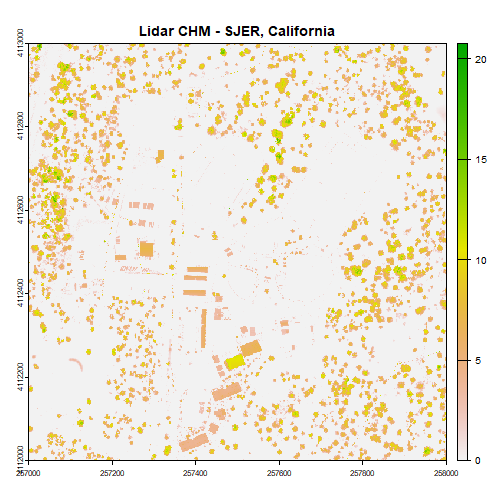

- For a quick introduction to working with remote sensing data, calculate a canopy height model from discrete return Lidar. NEON has an extensive catalog of tutorials about remote sensing principles and data; search the tutorials and tutorial series if you are interested in other topics.

- Connecting ground observations to remote sensing imagery is important to many NEON users; get familiar with the process, as well as some of the challenges of comparing these data sources, by comparing tree height observations to a canopy height model.

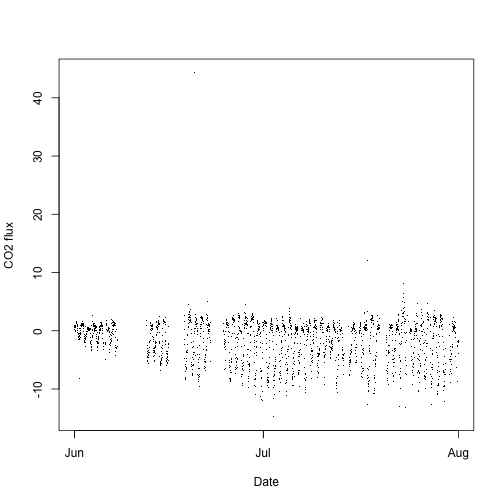

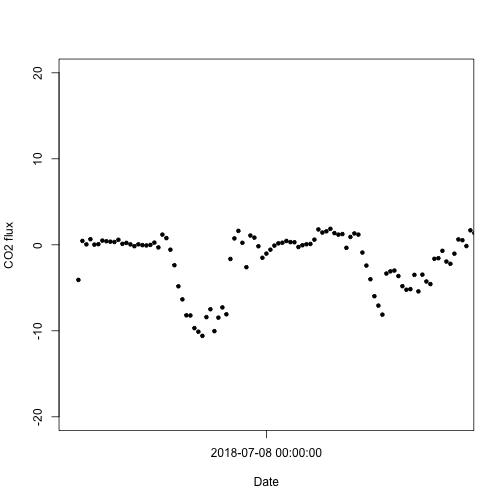

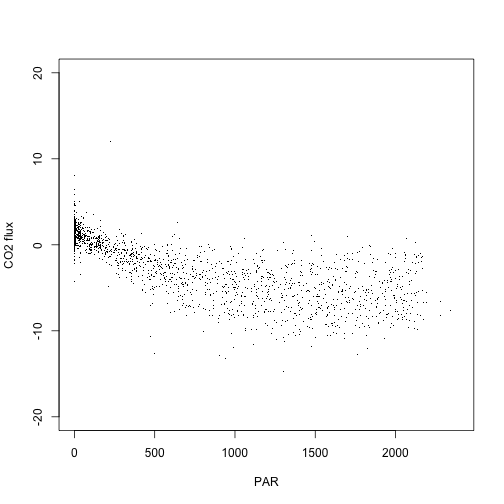

- Use the neonUtilities package to wrangle NEON surface-atmosphere exchange data (published in HDF5 format).

Download and Explore NEON Data

Authors: Claire K. Lunch

Last Updated: Feb 3, 2026

This tutorial covers downloading NEON data, using the Data Portal and either the neonUtilities R package or the neonutilities Python package, as well as basic instruction in beginning to explore and work with the downloaded data, including guidance in navigating data documentation. We will explore data of 3 different types, and make a simple figure from each.

NEON data

There are 3 basic categories of NEON data:

- Remote sensing (AOP) - Data collected by the airborne observation platform, e.g. LIDAR, surface reflectance

- Observational (OS) - Data collected by a human in the field, or in an analytical laboratory, e.g. beetle identification, foliar isotopes

- Instrumentation (IS) - Data collected by an automated, streaming sensor, e.g. net radiation, soil carbon dioxide. This category also includes the surface-atmosphere exchange (SAE) data, which are processed and structured in a unique way, distinct from other instrumentation data (see the introductory eddy flux data tutorial for details).

This lesson covers all three types of data. The download procedures are similar for all types, but data navigation differs significantly by type.

Objectives

After completing this activity, you will be able to:

- Download NEON data using the neonUtilities package.

- Understand downloaded data sets and load them into R or Python for analyses.

Things You’ll Need To Complete This Tutorial

You can follow either the R or Python code throughout this tutorial. * For R users, we recommend using R version 4+ and RStudio. * For Python users, we recommend using Python 3.9+.

Set up: Install Packages

Packages only need to be installed once, you can skip this step after the first time:

R

- neonUtilities: Basic functions for accessing NEON data

- neonOS: Functions for common data wrangling needs for NEON observational data.

- terra: Spatial data package; needed for working with remote sensing data.

install.packages("neonUtilities")

install.packages("neonOS")

install.packages("terra")Python

- neonutilities: Basic functions for accessing NEON data

- rasterio: Spatial data package; needed for working with remote sensing data.

pip install neonutilities

pip install rasterioAdditional Resources

- GitHub repository for neonUtilities R package

- GitHub repository for neonutilities Python package

- neonUtilities cheat sheet. A quick reference guide for users. Focuses on the R package, but applicable to Python as well.

Set up: Load packages

R

library(neonUtilities)

library(neonOS)

library(terra)Python

import neonutilities as nu

import os

import rasterio

import pandas as pd

import matplotlib.pyplot as pltGetting started: Download data from the Portal

Go to the NEON Data Portal and download some data! To follow the tutorial exactly, download Photosynthetically active radiation (PAR) (DP1.00024.001) data from September-November 2019 at Wind River Experimental Forest (WREF). The downloaded file should be a zip file named NEON_par.zip.

If you prefer to explore a different data product, you can still follow this tutorial. But it will be easier to understand the steps in the tutorial, particularly the data navigation, if you choose a sensor data product for this section.

Once you’ve downloaded a zip file of data from the portal, switch over to R or Python to proceed with coding.

Stack the downloaded data files: stackByTable()

The stackByTable() (or stack_by_table())

function will unzip and join the files in the downloaded zip file.

R

# Modify the file path to match the path to your zip file

stackByTable("~/Downloads/NEON_par.zip")Python

# Modify the file path to match the path to your zip file

nu.stack_by_table(os.path.expanduser("~/Downloads/NEON_par.zip"))In the directory where the zipped file was saved, you should now have an unzipped folder of the same name. When you open this you will see a new folder called stackedFiles, which should contain at least seven files: PARPAR_30min.csv, PARPAR_1min.csv, sensor_positions.csv, variables_00024.csv, readme_00024.txt, issueLog_00024.csv, and citation_00024_RELEASE-202X.txt.

Navigate data downloads: IS

Let’s start with a brief description of each file. This set of files is typical of a NEON IS data product.

- PARPAR_30min.csv: PAR data at 30-minute averaging intervals

- PARPAR_1min.csv: PAR data at 1-minute averaging intervals

- sensor_positions.csv: The physical location of each sensor collecting PAR measurements. There is a PAR sensor at each level of the WREF tower, and this table lets you connect the tower level index to the height of the sensor in meters.

- variables_00024.csv: Definitions and units for each data field in the PARPAR_#min tables.

- readme_00024.txt: Basic information about the PAR data product.

- issueLog_00024.csv: A record of known issues associated with PAR data.

- citation_00024_RELEASE-202X.txt: The citation to use when you publish a paper using these data, in BibTeX format.

We’ll explore the 30-minute data. To read the file, use the function

readTableNEON() or read_table_neon(), which

uses the variables file to assign data types to each column of data:

R

par30 <- readTableNEON(

dataFile="~/Downloads/NEON_par_R/stackedFiles/PARPAR_30min.csv",

varFile="~/Downloads/NEON_par_R/stackedFiles/variables_00024.csv")

head(par30)par30 <- readTableNEON(

dataFile="~/Downloads/NEON_par/stackedFiles/PARPAR_30min.csv",

varFile="~/Downloads/NEON_par/stackedFiles/variables_00024.csv")

head(par30)Python

par30 = nu.read_table_neon(

data_file=os.path.expanduser(

"~/Downloads/NEON_par/stackedFiles/PARPAR_30min.csv"),

var_file=os.path.expanduser(

"~/Downloads/NEON_par/stackedFiles/variables_00024.csv"))

# Open the par30 table in the table viewer of your choiceThe first four columns are added by stackByTable() when

it merges files across sites, months, and tower heights. The column

publicationDate is the date-time stamp indicating when the

data were published, and the release column indicates which

NEON data release the data belong to. For more information about NEON

data releases, see the

Data

Product Revisions and Releases page.

Information about each data column can be found in the variables file, where you can see definitions and units for each column of data.

Plot PAR data

Now that we know what we’re looking at, let’s plot PAR from the top tower level. We’ll use the mean PAR from each averaging interval, and we can see from the sensor positions file that the vertical index 080 corresponds to the highest tower level. To explore the sensor positions data in more depth, see the spatial data tutorial.

R

plot(PARMean~endDateTime,

data=par30[which(par30$verticalPosition=="080"),],

type="l")Python

par30top = par30[par30.verticalPosition=="080"]

fig, ax = plt.subplots()

ax.plot(par30top.endDateTime, par30top.PARMean)

plt.show()Looks good! The sun comes up and goes down every day, and some days are cloudy.

Plot more PAR data

To see another layer of data, add PAR from a lower tower level to the plot.

R

plot(PARMean~endDateTime,

data=par30[which(par30$verticalPosition=="080"),],

type="l")

lines(PARMean~endDateTime,

data=par30[which(par30$verticalPosition=="020"),],

col="orange")

Python

par30low = par30[par30.verticalPosition=="020"]

fig, ax = plt.subplots()

ax.plot(par30top.endDateTime, par30top.PARMean)

ax.plot(par30low.endDateTime, par30low.PARMean)

plt.show()We can see there is a lot of light attenuation through the canopy.

Download files and load directly to R: loadByProduct()

At the start of this tutorial, we downloaded data from the NEON data

portal. NEON also provides an API, and the neonUtilities

packages provide methods for downloading programmatically.

The steps we carried out above - downloading from the portal,

stacking the downloaded files, and reading in to R or Python - can all

be carried out in one step by the neonUtilities function

loadByProduct().

To get the same PAR data we worked with above, we would run this line

of code using loadByProduct():

R

parlist <- loadByProduct(dpID="DP1.00024.001",

site="WREF",

startdate="2019-09",

enddate="2019-11")Python

parlist = nu.load_by_product(dpid="DP1.00024.001",

site="WREF",

startdate="2019-09",

enddate="2019-11")Explore loaded data

The object returned by loadByProduct() in R is a named

list, and the object returned by load_by_product() in

Python is a dictionary. The objects contained in the list or dictionary

are the same set of tables we ended with after stacking the data from

the portal above. You can see this by checking the names of the tables

in parlist:

R

names(parlist)## [1] "citation_00024_RELEASE-2024" "issueLog_00024"

## [3] "PARPAR_1min" "PARPAR_30min"

## [5] "readme_00024" "sensor_positions_00024"

## [7] "variables_00024"Python

parlist.keys()## dict_keys(['PARPAR_1min', 'PARPAR_30min', 'citation_00024_RELEASE-2024', 'issueLog_00024', 'readme_00024', 'sensor_positions_00024', 'variables_00024'])Now let’s walk through the details of the inputs and options in

loadByProduct().

This function downloads data from the NEON API, merges the site-by-month files, and loads the resulting data tables into the programming environment, assigning each data type to the appropriate class. This is a popular choice for NEON data users because it ensures you’re always working with the latest data, and it ends with ready-to-use tables. However, if you use it in a workflow you run repeatedly, keep in mind it will re-download the data every time. See below for suggestions on saving the data locally to save time and compute resources.

loadByProduct() works on most observational (OS) and

sensor (IS) data, but not on surface-atmosphere exchange (SAE) data and remote sensing (AOP) data. For functions that download AOP data, see the final

section in this tutorial. For functions that work with SAE data, see the

NEON

eddy flux data tutorial.

The inputs to loadByProduct() control which data to

download and how to manage the processing. The list below shows the R syntax; in Python,

the inputs are the same but all lowercase (e.g. `dpid` instead of `dpID`)

and `.` is replaced by `_`.

dpID: the data product ID, e.g. DP1.00002.001site: defaults to “all”, meaning all sites with available data; can be a vector of 4-letter NEON site codes, e.g.c("HARV","CPER","ABBY")(or["HARV","CPER","ABBY"]in Python)startdateandenddate: defaults to NA, meaning all dates with available data; or a date in the form YYYY-MM, e.g. 2017-06. Since NEON data are provided in month packages, finer scale querying is not available. Both start and end date are inclusive.package: either basic or expanded data package. Expanded data packages generally include additional information about data quality, such as chemical standards and quality flags. Not every data product has an expanded package; if the expanded package is requested but there isn’t one, the basic package will be downloaded.timeIndex: defaults to “all”, to download all data; or the number of minutes in the averaging interval. Only applicable to IS data.release: Specify a NEON data release to download. Defaults to the most recent release plus provisional data. See the release tutorial for more information.include.provisional: T or F: should Provisional data be included in the download? Defaults to F to return only Released data, which are citable by a DOI and do not change over time. Provisional data are subject to change.check.size: T or F: should the function pause before downloading data and warn you about the size of your download? Defaults to T; if you are using this function within a script or batch process you will want to set it to F.token: Optional NEON API token for faster downloads. See this tutorial for instructions on using a token.progress: Set to F to turn off progress bars.cloud.mode: Can be set to T if you are working in a cloud environment; enables more efficient data transfer from NEON’s cloud storage.

The dpID is the data product identifier of the data you

want to download. The DPID can be found on the

Explore Data Products page. It will be in the form DP#.#####.###

Download observational data

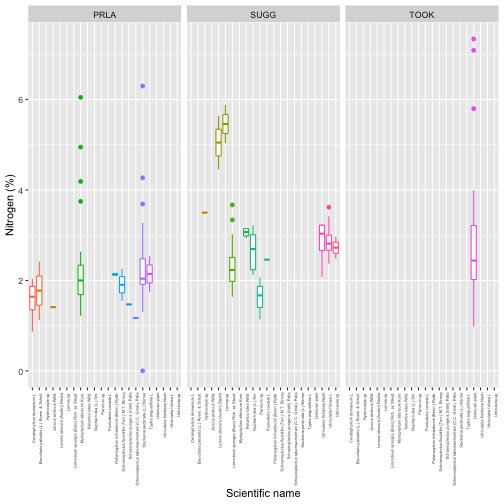

To explore observational data, we’ll download aquatic plant chemistry data (DP1.20063.001) from three lake sites: Prairie Lake (PRLA), Suggs Lake (SUGG), and Toolik Lake (TOOK).

R

apchem <- loadByProduct(dpID="DP1.20063.001",

site=c("PRLA","SUGG","TOOK"),

package="expanded",

release="RELEASE-2024",

check.size=F)Python

apchem = nu.load_by_product(dpid="DP1.20063.001",

site=["PRLA", "SUGG", "TOOK"],

package="expanded",

release="RELEASE-2024",

check_size=False)Navigate data downloads: OS

As we saw above, the object returned by loadByProduct()

is a named list of data frames. Let’s check out what’s the same and

what’s different from the IS data tables.

R

names(apchem)## [1] "apl_biomass" "apl_clipHarvest"

## [3] "apl_plantExternalLabDataPerSample" "apl_plantExternalLabQA"

## [5] "asi_externalLabPOMSummaryData" "categoricalCodes_20063"

## [7] "citation_20063_RELEASE-2024" "issueLog_20063"

## [9] "readme_20063" "validation_20063"

## [11] "variables_20063"Python

apchem.keys()## dict_keys(['apl_biomass', 'apl_clipHarvest', 'apl_plantExternalLabDataPerSample', 'apl_plantExternalLabQA', 'asi_externalLabPOMSummaryData', 'categoricalCodes_20063', 'citation_20063_RELEASE-2024', 'issueLog_20063', 'readme_20063', 'validation_20063', 'variables_20063'])Explore tables

As with the sensor data, we have some data tables and some metadata tables. Most of the metadata files are the same as the sensor data: readme, variables, issueLog, and citation. These files contain the same type of metadata here that they did in the IS data product. Let’s look at the other files:

- apl_clipHarvest: Data from the clip harvest collection of aquatic plants

- apl_biomass: Biomass data from the collected plants

- apl_plantExternalLabDataPerSample: Chemistry data from the collected plants

- apl_plantExternalLabQA: Quality assurance data from the chemistry analyses

- asi_externalLabPOMSummaryData: Quality metrics from the chemistry lab

- validation_20063: For observational data, a major method for ensuring data quality is to control data entry. This file contains information about the data ingest rules applied to each input data field.

- categoricalCodes_20063: Definitions of each value for categorical data, such as growth form and sample condition

You can work with these tables from the named list object, but many

people find it easier to extract each table from the list and work with

it as an independent object. To do this, use the list2env()

function in R or globals().update() in Python:

R

list2env(apchem, .GlobalEnv)## <environment: R_GlobalEnv>Python

globals().update(apchem)Save data locally

Keep in mind that using loadByProduct() will re-download

the data every time you run your code. In some cases this may be

desirable, but it can be a waste of time and compute resources. To come

back to these data without re-downloading, you’ll want to save the

tables locally. The most efficient option is to save the named list in

total - this will also preserve the data types.

R

saveRDS(apchem,

"~/Downloads/aqu_plant_chem.rds")Python

# There are a variety of ways to do this in Python; NEON

# doesn't currently have a specific recommendation. If

# you don't have a data-saving workflow you already use,

# we suggest you check out the pickle module.

Then you can re-load the object to a programming environment any time.

Other options for saving data locally:

- Similar to the workflow we started this tutorial with, but using

neonUtilitiesto download instead of the Portal: UsezipsByProduct()andstackByTable()instead ofloadByProduct(). With this option, use the functionreadTableNEON()to read the files, to get the same column type assignment thatloadByProduct()carries out. Details can be found in our neonUtilities tutorial. - Try out the community-developed

neonstorepackage, which is designed for maintaining a local store of the NEON data you use. TheneonUtilitiesfunctionstackFromStore()works with files downloaded byneonstore. See the neonstore tutorial for more information.

Now let’s explore the aquatic plant data. OS data products are simple in that the data are generally tabular, and data volumes are lower than the other NEON data types, but they are complex in that almost all consist of multiple tables containing information collected at different times in different ways. For example, samples collected in the field may be shipped to a laboratory for analysis. Data associated with the field collection will appear in one data table, and the analytical results will appear in another. Complexity in working with OS data usually involves bringing data together from multiple measurements or scales of analysis.

As with the IS data, the variables file can tell you more about the data tables.

OS data products each come with a Data Product User Guide, which can be downloaded with the data, or accessed from the document library on the Data Portal, or the Product Details page for the data product. The User Guide is designed to give a basic introduction to the data product, including a brief summary of the protocol and descriptions of data format and structure.

Explore isotope data

To get started with the aquatic plant chemistry data, let’s take a

look at carbon isotope ratios in plants across the three sites we

downloaded. The chemical analytes are reported in the

apl_plantExternalLabDataPerSample table, and the table is

in long format, with one record per sample per analyte, so we’ll subset

to only the carbon isotope analyte:

R

boxplot(analyteConcentration~siteID,

data=apl_plantExternalLabDataPerSample,

subset=analyte=="d13C",

xlab="Site", ylab="d13C")Python

apl13C = apl_plantExternalLabDataPerSample[

apl_plantExternalLabDataPerSample.analyte=="d13C"]

grouped = apl13C.groupby("siteID")["analyteConcentration"]

fig, ax = plt.subplots()

ax.boxplot(x=[group.values for name, group in grouped],

tick_labels=grouped.groups.keys())plt.show()We see plants at Suggs and Toolik are quite low in 13C, with more

spread at Toolik than Suggs, and plants at Prairie Lake are relatively

enriched. Clearly the next question is what species these data

represent. But taxonomic data aren’t present in the

apl_plantExternalLabDataPerSample table, they’re in the

apl_biomass table. We’ll need to join the two tables to get

chemistry by taxon.

Every NEON data product has a Quick Start Guide (QSG), and for OS

products it includes a section describing how to join the tables in the

data product. Since it’s a pdf file, loadByProduct()

doesn’t bring it in, but you can view the Aquatic plant chemistry QSG on

the

Product

Details page. In R, the neonOS package uses the information

from the QSGs to provide an automated table-joining function,

joinTableNEON().

Explore isotope data by species

R

apct <- joinTableNEON(apl_biomass,

apl_plantExternalLabDataPerSample)Using the merged data, now we can plot carbon isotope ratio for each taxon.

boxplot(analyteConcentration~scientificName,

data=apct, subset=analyte=="d13C",

xlab=NA, ylab="d13C",

las=2, cex.axis=0.7)Python

There is not yet an equivalent to the neonOS package in

Python, so we will code the table join manually, based on the info in

the Quick Start Guide:

apct = pd.merge(apl_biomass,

apl_plantExternalLabDataPerSample,

left_on=["siteID", "chemSubsampleID"],

right_on=["siteID", "sampleID"],

how="outer")Using the merged data, now we can plot carbon isotope ratio for each taxon.

apl13Cspp = apct[apct.analyte=="d13C"]

grouped = apl13Cspp.groupby("scientificName")["analyteConcentration"]

fig, ax = plt.subplots()

ax.boxplot(x=[group.values for name, group in grouped],

tick_labels=grouped.groups.keys())ax.tick_params(axis='x', labelrotation=90)

plt.show()And now we can see most of the sampled plants have carbon isotope ratios around -30, with just a few species accounting for most of the more enriched samples.

Download remote sensing data: byFileAOP() and byTileAOP()

Remote sensing data files are very large, so downloading them can

take a long time. byFileAOP() and byTileAOP()

enable easier programmatic downloads, but be aware it can take a very

long time to download large amounts of data.

Input options for the AOP functions are:

dpID: the data product ID, e.g. DP1.00002.001site: the 4-letter code of a single site, e.g. HARVyear: the 4-digit year to downloadsavepath: the file path you want to download to; defaults to the working directorycheck.size: T or F: should the function pause before downloading data and warn you about the size of your download? Defaults to T; if you are using this function within a script or batch process you will want to set it to F.easting:byTileAOP()only. Vector of easting UTM coordinates whose corresponding tiles you want to downloadnorthing:byTileAOP()only. Vector of northing UTM coordinates whose corresponding tiles you want to downloadbuffer:byTileAOP()only. Size in meters of buffer to include around coordinates when deciding which tiles to downloadtoken: Optional NEON API token for faster downloads.chunk_size: Only in Python. Set the chunk size of chunked downloads, can improve efficiency in some cases. Defaults to 1 MB.

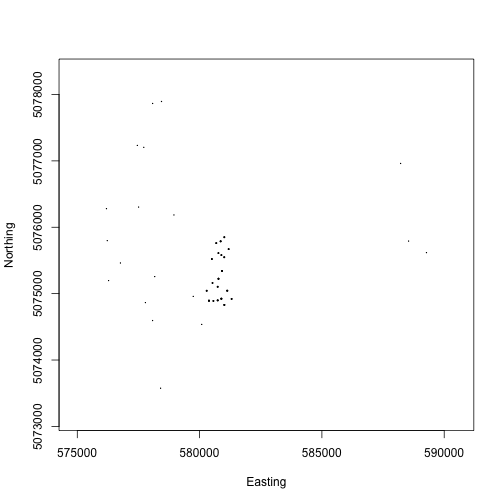

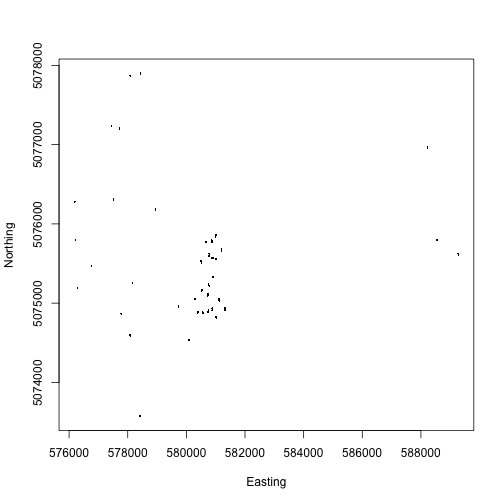

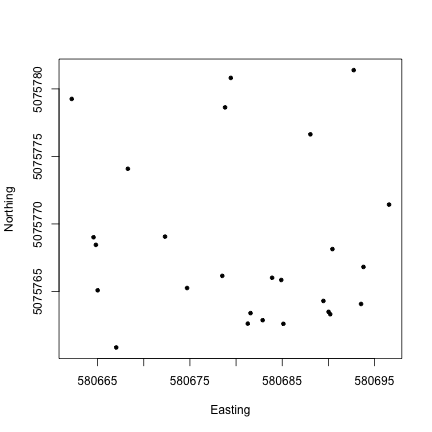

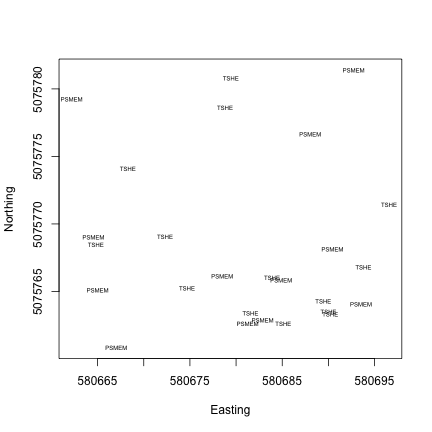

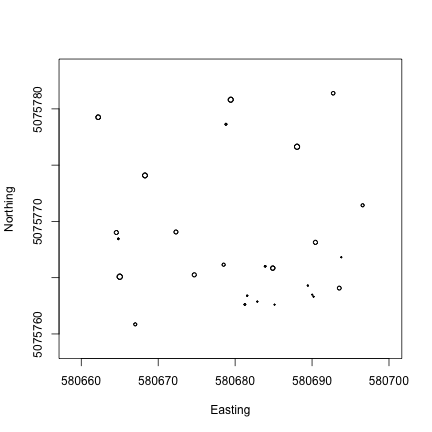

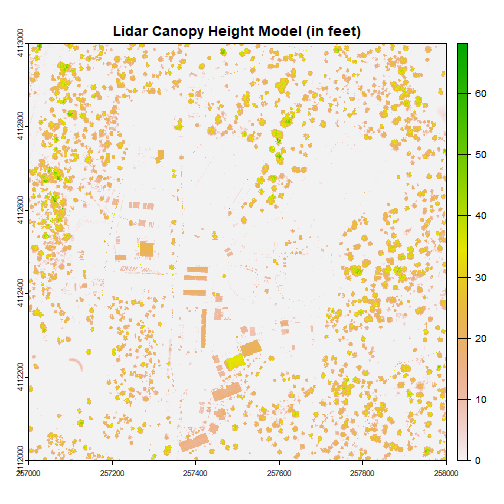

Here, we’ll download one tile of Ecosystem structure (Canopy Height Model) (DP3.30015.001) from WREF in 2017.

R

byTileAOP(dpID="DP3.30015.001", site="WREF",

year=2017,easting=580000,

northing=5075000,

savepath="~/Downloads")Python

nu.by_tile_aop(dpid="DP3.30015.001", site="WREF",

year=2017,easting=580000,

northing=5075000,

savepath=os.path.expanduser(

"~/Downloads"))In the directory indicated in savepath, you should now

have a folder named DP3.30015.001 with several nested

subfolders, leading to a tif file of a canopy height model tile.

Navigate data downloads: AOP

To work with AOP data, the best bet in R is the terra

package. It has functionality for most analyses you might want to do. In

Python, we’ll use the rasterio package here; explore NEON remote sensing

tutorials for more guidance.

First let’s read in the tile we downloaded:

R

chm <- rast("~/Downloads/DP3.30015.001/neon-aop-products/2017/FullSite/D16/2017_WREF_1/L3/DiscreteLidar/CanopyHeightModelGtif/NEON_D16_WREF_DP3_580000_5075000_CHM.tif")Python

chm = rasterio.open(os.path.expanduser("~/Downloads/DP3.30015.001/neon-aop-products/2017/FullSite/D16/2017_WREF_1/L3/DiscreteLidar/CanopyHeightModelGtif/NEON_D16_WREF_DP3_580000_5075000_CHM.tif"))Plot canopy height model

R

plot(chm, col=topo.colors(6))Python

plt.imshow(chm.read(1))

plt.show()Now we can see canopy height across the downloaded tile; the tallest trees are over 60 meters, not surprising in the Pacific Northwest. There is a clearing or clear cut in the lower right quadrant.

Next steps

Now that you’ve learned the basics of downloading and understanding NEON data, where should you go to learn more? There are many more NEON tutorials to explore, including how to align remote sensing and ground-based measurements, a deep dive into the data quality flagging in the sensor data products, and much more. For a recommended suite of tutorials for new users, check out the Getting Started with NEON Data tutorial series.

Using an API Token when Accessing NEON Data with neonUtilities

Authors: [Claire K. Lunch]

Last Updated: Jun 18, 2025

NEON data can be downloaded from either the NEON Data Portal or the NEON API. When downloading from the Data Portal, you can create a user account. Read about the benefits of an account on the User Account page. You can also use your account to create a token for using the API. Your token is unique to your account, so don’t share it.

Using a token is optional! You can download data without a token, and without a user account. Using a token when downloading data via the API, including when using the neonUtilities package, links your downloads to your user account, as well as enabling faster download speeds. For more information about token usage and benefits, see the NEON API documentation page.

For now, in addition to faster downloads, using a token helps NEON to track data downloads. Using anonymized user information, we can then calculate data access statistics, such as which data products are downloaded most frequently, which data products are downloaded in groups by the same users, and how many users in total are downloading data. This information helps NEON to evaluate the growth and reach of the observatory, and to advocate for training activities, workshops, and software development.

Tokens can be used whenever you use the NEON API. In this tutorial, we’ll focus on using tokens with the neonUtilities R package and the neonutilities Python package. You can follow the tutorial using your preferred programming language.

Objectives

After completing this activity, you will be able to:

- Create a NEON API token

- Use your token when downloading data with neonUtilities

Things You’ll Need To Complete This Tutorial

A recent version of R (version 4+) or Python (3.9+) installed on your computer.

Install and Load Packages

R

Install the neonUtilities package. You can skip this step if it’s already installed, but remember to update regularly.

install.packages("neonUtilities")Load the package.

library(neonUtilities)Python

Install the neonutilities package. You can skip this step if it’s already installed, but remember to update regularly.

# do this in the command line

pip install neonutilitiesLoad the package.

import neonutilities as nu

import osAdditional Resources

If you’ve never downloaded NEON data using the neonUtilities package before, we recommend starting with the Download and Explore tutorial before proceeding with this tutorial.

In the next sections, we’ll get an API token from the NEON Data Portal, and then use it in neonUtilities when downloading data.

Get a NEON API Token

The first step is create a NEON user account, if you don’t have one. Follow the instructions on the Data Portal User Accounts page. If you do already have an account, go to the NEON Data Portal, sign in, and go to your My Account profile page.

Once you have an account, you can create an API token for yourself. At the bottom of the My Account page, you should see this bar:

Click the ‘GET API TOKEN’ button. After a moment, you should see this:

Click on the Copy button to copy your API token to the clipboard:

Use API token in neonUtilities

In the next section, we’ll walk through saving your token somewhere secure but accessible to your code. But first let’s try out using the token the easy way, by using it as a simple text string.

NEON API tokens are very long, so it would be annoying to keep pasting the entire string into functions. Assign your token an object name:

R

NEON_TOKEN <- "PASTE YOUR TOKEN HERE"Now we’ll use the loadByProduct() function to download

data. Your API token is entered as the optional token input

parameter. For this example, we’ll download Plant foliar traits

(DP1.10026.001).

foliar <- loadByProduct(dpID="DP1.10026.001", site="all",

package="expanded", check.size=F,

token=NEON_TOKEN)Python

NEON_TOKEN = "PASTE YOUR TOKEN HERE"Now we’ll use the load_by_product() function to download

data. Your API token is entered as the optional token input

parameter. For this example, we’ll download Plant foliar traits

(DP1.10026.001).

foliar = nu.load_by_product(dpid="DP1.10026.001", site="all",

package="expanded", check_size=False,

token=NEON_TOKEN)You should now have data saved in the foliar object; the

API silently used your token. If you’ve downloaded data without a token

before, you may notice this is faster!

This format applies to all neonUtilities functions that

involve downloading data or otherwise accessing the API; you can use the

token input with all of them. For example, when downloading

remote sensing data:

Use token to download AOP data

R

chm <- byTileAOP(dpID="DP3.30015.001", site="WREF",

year=2017, check.size=F,

easting=c(571000,578000),

northing=c(5079000,5080000),

savepath=getwd(),

token=NEON_TOKEN)Python

chm = nu.by_tile_aop(dpid="DP3.30015.001", site="WREF",

year=2017, check_size=False,

easting=[571000,578000],

northing=[5079000,5080000],

savepath=os.getcwd(),

token=NEON_TOKEN)Token management for open code

Your API token is unique to your account, so don’t share it!

If you’re writing code that will be shared with colleagues or

available publicly, such as in a GitHub repository or supplemental

materials of a published paper, you can’t include the line of code above

where we assigned your token to NEON_TOKEN, since your

token is fully visible in the code there. Instead, you’ll need to save

your token locally on your computer, and pull it into your code without

displaying it. There are a few ways to do this, we’ll show two options

here.

Option 1: Save the token in a local file, and

source()(R) orimport(Python) that file at the start of every script. This is fairly simple but requires a line of code in every script.Option 2: Set the token as an environment variable and you can access it from any script. This is a little harder to set up initially, but once it’s done, it’s done globally, and it will work in every script you run.

Option 1: Save token in a local file

R

Open a new, empty R script (.R). Put a single line of code in the script:

NEON_TOKEN <- "PASTE YOUR TOKEN HERE"Save this file in a logical place on your machine, somewhere that

won’t be visible publicly. Here, let’s call the file

neon_token_source.R, and save it to the working directory.

Then, at the start of every script where you’re going to use the NEON

API, you would run this line of code:

source(paste0(wd, "/neon_token_source.R"))Now you can use token=NEON_TOKEN when you run

neonUtilities functions, and you can share your code

without accidentally sharing your token.

Python

Open a new, empty Python script (.py). Put a single line of code in the script:

NEON_TOKEN = "PASTE YOUR TOKEN HERE"Save this file in a logical place on your machine, somewhere that

won’t be visible publicly. Here, let’s call the file

neon_token_source.py, and save it to the working directory.

Then, at the start of every script where you’re going to use the NEON

API, you would run this line of code:

import neon_token_sourceNow you can use token=neon_token_source.NEON_TOKEN when

you run neonutilities functions, and you can share your

code without accidentally sharing your token.

Option 2: Set token as environment variable

R

To create a persistent environment variable in R, we use a

.Renviron file. Before creating a file, check which

directory R is using as your home directory:

# For Windows:

Sys.getenv("R_USER")

For Mac/Linux:

Sys.getenv("HOME")

Check the home directory to see if you already have a

.Renviron file, using the file browse pane in

RStudio, or using another file browse method with hidden files

shown. Files that begin with . are hidden by default, but

RStudio recognizes files that begin with .R and displays

them.

If you already have a .Renviron file, open it and follow

the instructions below to add to it. If you don’t have one, create one

using File -> New File -> Text File in the RStudio menus.

Add one line to the text file. In this case, there are no quotes around the token value.

NEON_TOKEN=PASTE YOUR TOKEN HERESave the file as .Renviron, in the RStudio home

directory identified above. Double check the spelling, this will not

work if you have a typo. Re-start R to load the environment.

Once your token is assigned to an environment variable, use the

function Sys.getenv() to access it. For example, in

loadByProduct():

foliar <- loadByProduct(dpID="DP1.10026.001", site="all",

package="expanded", check.size=F,

token=Sys.getenv("NEON_TOKEN"))Python

To create a persistent environment variable in Python, the simplest

option is to use the dotenv module. You will still need to

load the variables in each script, but it provides a more flexible way

to manage enrionment variables.

pip install python-dotenvCreate a file named .env in the project folder. If

you’re using GitHub, make sure .env is in your

.gitignore to avoid syncing tokens to GitHub.

To add variables to the .env file:

import dotenv

dotenv.set_key(dotenv_path=".env",

key_to_set="NEON_TOKEN",

value_to_set="YOUR TOKEN HERE")

Use the command dotenv.load_dotenv() to load environment

variables to the session, then use os.environ.get() to

access particular variables. For example, in

load_by_product():

dotenv.load_dotenv()foliar = nu.load_by_product(dpid="DP1.10026.001", site="all",

package="expanded", check_size=False,

token=os.environ.get("NEON_TOKEN"))If dotenv.load_dotenv() returns False, the

variables did not load. Try

dotenv.load_dotenv(dotenv.find_dotenv(usecwd=True)).

Get Lesson Code

Understanding Releases and Provisional Data

Authors: Claire K. Lunch

Last Updated: Jan 14, 2025

What is a data release?

A NEON data Release is a fixed set of data that does not change over time. Each data product in a Release is associated with a unique Digital Object Identifier (DOI) that can be used for data citation. Because the data in a Release do not change, analyses performed on those data are traceable and reproducible.

NEON data are initially published under a Provisional status, meaning that data may be updated on an as-needed basis, without guarantee of reproducibility. Publishing Provisional data allows NEON to publish data rapidly while retaining the ability to make corrections or additions as they are identified.

After initial publication, a lag time occurs before the data are formally Released. During this lag time, extra quality control (QC) procedures, which are described in data product-specific documentation, may be performed. This lag time also ensures all data from laboratory analyses that complement existing field data are available before a data Release.

Although data within a Release do not change, NEON may discover errors or needed updates to data after the publication of a Release. For this reason, NEON generates a Release annually; each Release represents the best data available at the time of publication. Changes to data between Releases are documented on the web page for each Release and in the issue log for each data product.

Data citation

Each data product within a Release is associated with a DOI for reference and citation. DOI URLs will always resolve back to their corresponding data product Release’s landing webpage and are thus ideal for citing NEON data in publications and applications.

For more details about NEON data Releases, see the Data Product Revisions and Releases web page.

Objectives

After completing this activity, you will be able to:

- Download data from a specific NEON data Release

- Download Provisional NEON data

- Use appropriate citation information for both Released and Provisional data

Things You’ll Need To Complete This Tutorial

Most of this tutorial can be completed with nothing but an internet browser, without writing any code. You can learn about Releases and Provisional data and explore them on the Data Portal, and view figures from the data downloads.

To complete the full tutorial, including the coding sections, you will need a version of R (4.0 or higher) and, preferably, RStudio loaded on your computer. This code may work with earlier versions but it hasn't been tested.

Install R Packages

-

neonUtilities:

install.packages("neonUtilities")

Additional Resources

- NEON Data Portal

- NEON Code Hub

Set Up R Environment

First install and load the necessary packages.

# install packages. can skip this step if

# the packages are already installed

install.packages("neonUtilities")

# load packages

library(neonUtilities)

library(ggplot2)

# set working directory

# modify for your computer

setwd("~/data")

Find data of interest

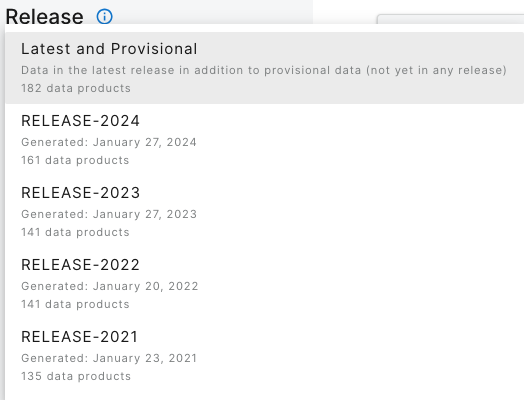

We'll start with the Explore Data Products page on the NEON Data Portal, which has visual interfaces to allow you to select a particular Release, and show you which data are included in it.

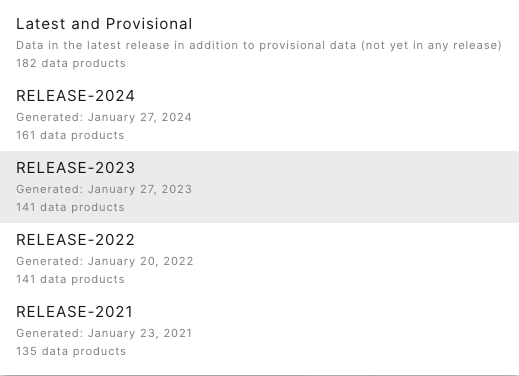

On the lefthand menu bar, the dropdown menu of Releases shows the available releases and the default display, which is the latest Release plus Provisional data.

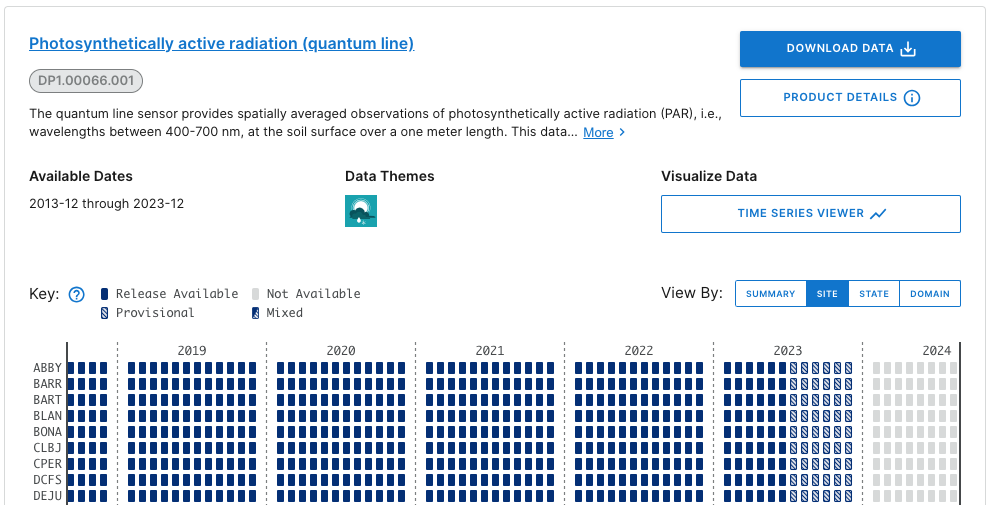

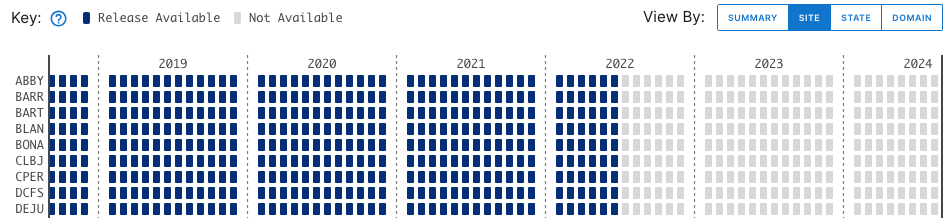

Stay on the default menu option for now. Navigate to quantum line PAR, DP1.00066.001. Expand the data availability chart by clicking on View By: SITE.

What you see will probably not look exactly like this, but similar. This is a screenshot from January 2024; more data and possibly more Releases may have been published by the time you follow this tutorial.

Here we can consult the key and see that data up to June 2023 are in a Release (solid boxes) and data collected since June 2023 are Provisional (hatched boxes). There are no 2024 data available yet (pale grey boxes).

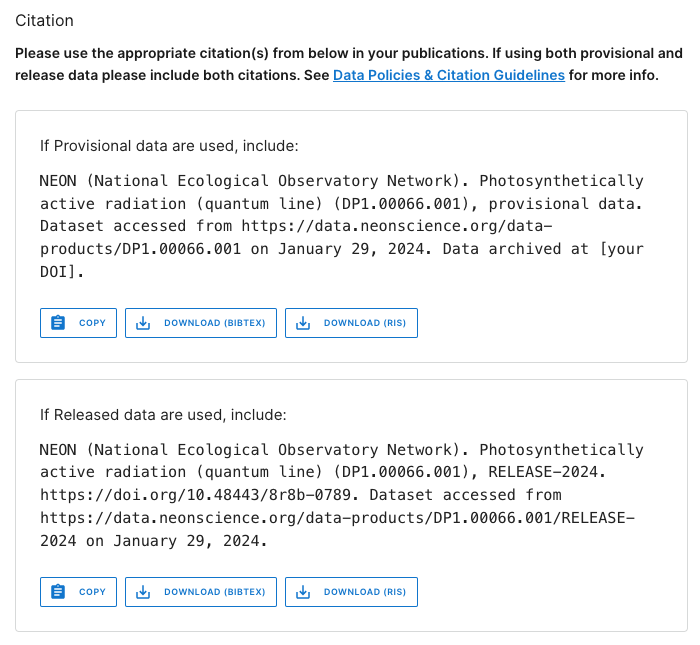

Now click on the Product Details button to go to the DP1.00066.001 web page.

This page contains details and documentation about the data product, including citation information for publications using these data.

Note that the citation guidance is different for Provisional and Released data. The Release citation includes a direct link to a DOI. Since Provisional data are subject to change without notice, the data you download today may not exactly match data you download tomorrow. Because of this, the recommended workflow is to archive the version of Provisional data you used in your analyses, and provide a DOI to that archived version for citation. Guidance in doing this is available on the Publishing Research Outputs web page.

Downloading data

Latest and provisional

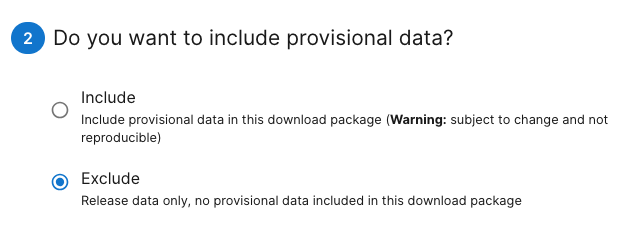

Go back to the main Explore Data Products page and click on Download Data. Select BARR (Utquiagvik) and BONA (Caribou Creek) for the year of 2023. Click Next.

By default, the download options are set to access the latest Release and exclude Provisional data, even if they are available in the sites and dates you selected. To download Provisional data, select the radio button for Include in the interface.

Download by Release

But let's say you're not looking for the most recently updated data. You're replicating a colleague's analysis, and want to download the precise version of data they used. In that case, you need to download the Release they used.

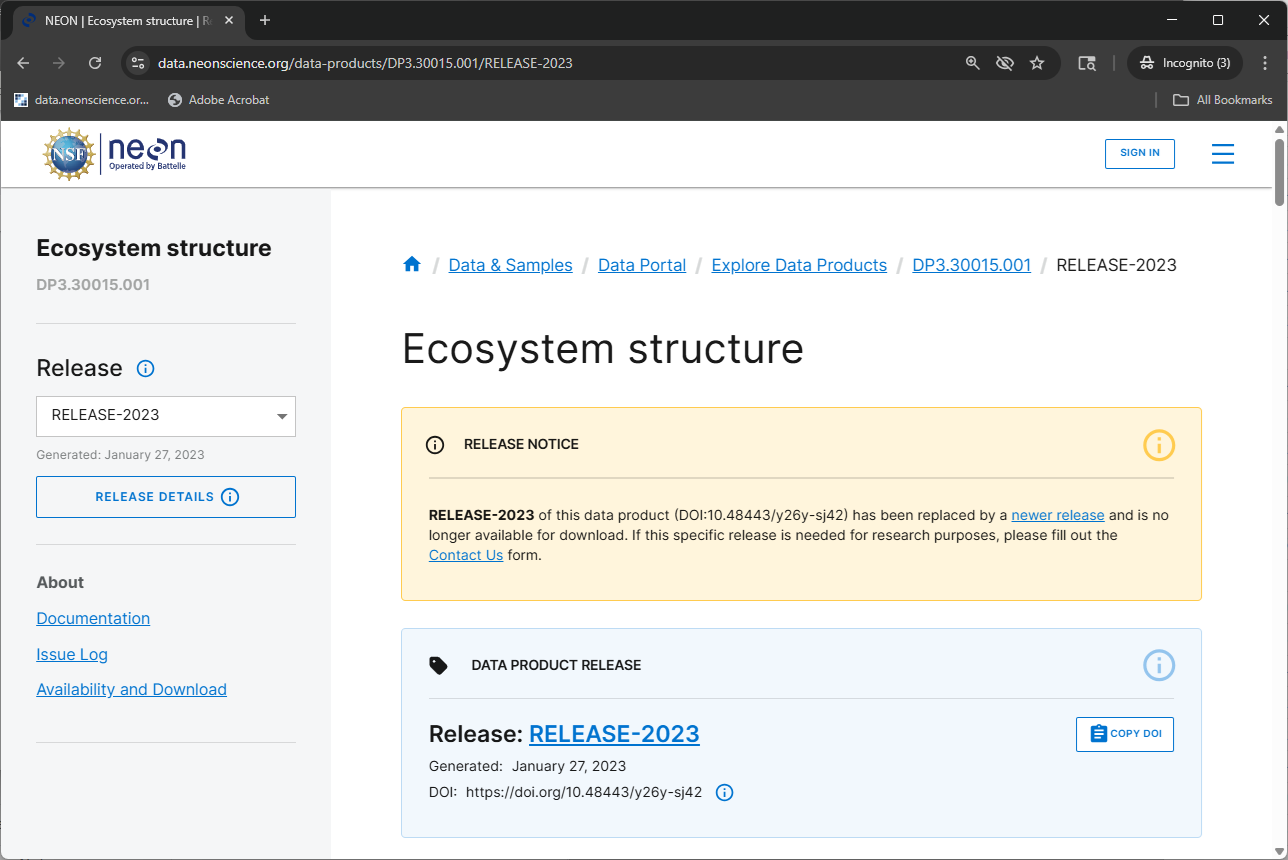

Go back to the Explore Data Products page, and select the Release you need from the Release menu on the lefthand bar. For this example, let's use RELEASE-2023.

Now, in the data availability chart, we can see there are no hatched boxes, since we've selected only data that are in a Release. And data availability extends only through June 2022, the end date for sensor data in RELEASE-2023.

Downloading data using neonUtilities

NEON data can also be downloaded in R, using the neonUtilities package. If

you're not familiar with the neonUtilities package and how to use

it to access NEON data, we recommend you follow the Download and Explore NEON Data

tutorial as well as this one, for a more complete introduction.

Downloading the latest Release and Provisional

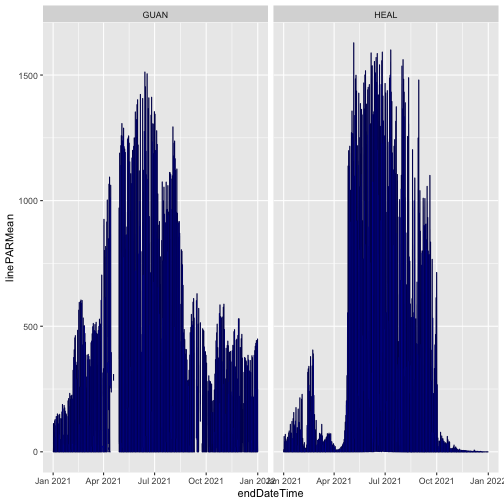

Let's download a full year of data for two sites, as we did on the Data Portal above. Here we'll download data from HEAL (Healy) and GUAN (Guanica), January 2023 - December 2023.

(Note: To see the code behavior below, if you are following this tutorial in 2025 or later, you may need to adjust the dates. In general, use the most recent full year of data.)

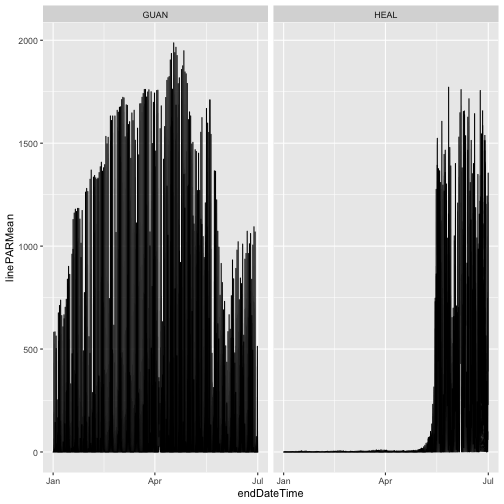

qpr <- loadByProduct(dpID="DP1.00066.001",

site=c("HEAL", "GUAN"),

startdate="2023-01",

enddate="2023-12",

check.size=F)

In the messages output as this function runs, you will see:

Provisional data were excluded from available files list. To download provisional data, use input parameter include.provisional=TRUE.

Just like on the Data Portal, you need to opt in to download Provisional data. We'll do that below. But first, let's take a look at the data we downloaded:

gg <- ggplot(qpr$PARQL_30min,

aes(endDateTime, linePARMean)) +

geom_line() +

facet_wrap(~siteID)

gg

As we can see, the only data present from 2023 are from the first half of the year, the data included in RELEASE-2024. Provisional data from July 2023 onward were omitted.

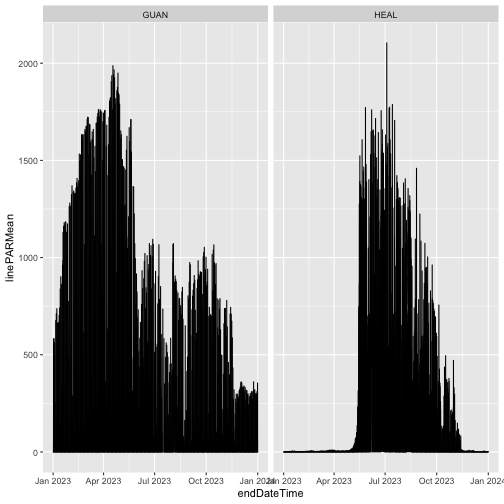

Now let's download the Provisional data as well:

qpr <- loadByProduct(dpID="DP1.00066.001",

site=c("HEAL", "GUAN"),

startdate="2023-01",

enddate="2023-12",

include.provisional=T,

check.size=F)

And now plot the full year of data:

gg <- ggplot(qpr$PARQL_30min,

aes(endDateTime, linePARMean)) +

geom_line() +

facet_wrap(~siteID)

gg

Downloading by Release

To download a specific Release, add the input parameter release=.

Let's download the data from collection year 2021 in RELEASE-2023.

qpr23 <- loadByProduct(dpID="DP1.00066.001",

site=c("HEAL", "GUAN"),

startdate="2021-01",

enddate="2021-12",

release="RELEASE-2023",

check.size=F)

What types of differences might there be in data from different Releases? Let's look at the same data set in RELEASE-2024.

qpr24 <- loadByProduct(dpID="DP1.00066.001",

site=c("HEAL", "GUAN"),

startdate="2021-01",

enddate="2021-12",

release="RELEASE-2024",

check.size=F)

Plot mean PAR from each release. This time we'll only use data from soil plot 001, to simplify the figure. We'll plot RELEASE-2023 in black and RELEASE-2024 in partially transparent blue, to see differences where they're overlaid.

gg <- ggplot(qpr23$PARQL_30min

[which(qpr23$PARQL_30min$horizontalPosition=="001"),],

aes(endDateTime, linePARMean)) +

geom_line() +

facet_wrap(~siteID) +

geom_line(data=qpr24$PARQL_30min

[which(qpr24$PARQL_30min$horizontalPosition=="001"),],

color="blue", alpha=0.3)

gg

The blue and black lines are basically identical; the mean PAR data have not changed between the two releases. We can consult the issue log to see if there are any changes recorded for other variables in the data.

tail(qpr24$issueLog_00066)

## id parentIssueID issueDate resolvedDate dateRangeStart

## <int> <int> <char> <char> <char>

## 1: 45613 NA 2022-01-18T00:00:00Z 2022-01-01T00:00:00Z 2013-01-01T00:00:00Z

## 2: 60104 NA 2022-07-05T00:00:00Z 2022-10-19T00:00:00Z 2021-10-15T00:00:00Z

## 3: 66607 NA 2022-09-12T00:00:00Z 2022-10-31T00:00:00Z 2022-06-12T00:00:00Z

## 4: 78006 NA 2023-03-02T00:00:00Z 2023-11-03T00:00:00Z 2023-03-02T00:00:00Z

## 5: 78310 NA 2023-03-16T00:00:00Z 2023-03-31T00:00:00Z 2014-02-18T00:00:00Z

## 6: 85004 NA 2024-01-04T00:00:00Z 2024-01-04T00:00:00Z 2013-12-01T00:00:00Z

## dateRangeEnd locationAffected

## <char> <char>

## 1: 2021-10-01T00:00:00Z All

## 2: 2022-10-19T00:00:00Z WREF soil plot 3 (HOR.VER: 003.000)

## 3: 2022-10-31T00:00:00Z YELL

## 4: 2023-11-03T00:00:00Z All

## 5: 2023-03-01T00:00:00Z All CPER soil plots (HOR: 001, 002, 003, 004, and 005)

## 6: 2023-12-31T00:00:00Z All terrestrial sites

## issue

## <char>

## 1: Data were reprocessed to incorporate minor and/or isolated corrections to quality control thresholds, sensor installation periods, geolocation data, and manual quality flags.

## 2: Sensor malfunction indicated by positive nighttime radiation

## 3: Severe flooding destroyed several roads into Yellowstone National Park in June 2022, making the YELL and BLDE sites inaccessible to NEON staff. Preventive and corrective maintenance were not able to be performed, nor was the annual exchange of sensors for calibration and validation. While automated quality control routines are likely to detect and flag most issues, users are advised to review data carefully.

## 4: Photosynthetically active radiation (quantum line) measurement height (zOffset) incorrectly shown as 0 m in the sensor positions file in the download package. The actual measurement height is 3+ cm above the soil surface.

## 5: Two different soil plot reference corner records were created for each CPER soil plot, which resulted in two partially different sets of sensor location data being reported in the sensor_positions file. Affected variables were referenceLatitude, referenceLongitude, referenceElevation, eastOffset, northOffset, xAzimuth, and yAzimuth. Other sensor location metadata, including sensor height/depth (zOffset), were unaffected but were still reported twice for each sensor.

## 6: Photosynthetically active radiation (quantum line) (DP1.00066.001) has been reprocessed using NEON’s new instrument processing pipeline. Computation of skewness and kurtosis statistics has been updated in the new pipeline. Previously, these statistics were computed with the Apache Commons Mathematics Library, version 3.6.1, which uses special unbiased formulations and not those described in the Algorithm Theoretical Basis Document (ATBD), which cites the standard formulations. Differences in data values between previous and reprocessed data for the skewness statistic are typically < 1% for 30-min averages and < 10% for 1-min averages. Differences for the kurtosis statistic are much larger, as the previous version reported excess kurtosis which subtracts a value of three so that a normal distribution is equal to zero. Thus, new values for kurtosis are typically greater by 3 ± 1% for 30-min averages and 3 ± 10% for 1-min averages.

## resolution

## <char>

## 1: Reprocessed provisional data are available now. Reprocessed data previously included in RELEASE-2021 will become available when RELEASE-2022 is issued.

## 2: Data flagged and sensor cable replaced.

## 3: Normal operations resumed on October 31, 2022, when the National Park Service opened a newly constructed road from Gardiner, MT to Mammoth, WY with minimal restrictions. For more details about data impacts, see Data Notification https://www.neonscience.org/impact/observatory-blog/data-impacts-neons-yellowstone-sites-yell-blde-due-catastrophic-flooding-0

## 4: Measurement heights have been added and data republication has been requested, which should be completed within a few weeks. Heights will be available from July 2022 onwards once data republication is complete and will be available for all data once RELEASE-2024 data are published (expected January 2024). The issue will persist in RELEASE-2023 data and prior data releases.

## 5: The erroneous reference corner record was deleted and data were scheduled for republication. This issue will persist in data from June 2022 and earlier until the RELEASE-2024 data is published (approximately January 2024). It will also persist in RELEASE-2023 and earlier data releases.

## 6: All provisional data have been updated, and data for all time and all sites will be updated in RELEASE-2024 (expected to be issued in late January, 2024).

The final issue noted in the table was reported and resolved in January 2024. It tells us that the data were reprocessed for the 2024 Release, and the algorithms for the skewness and kurtosis statistics were updated. Let's take a look at the kurtosis statistics from the two Releases.

gg <- ggplot(qpr23$PARQL_30min

[which(qpr23$PARQL_30min$horizontalPosition=="001"),],

aes(endDateTime, linePARKurtosis)) +

geom_line() +

facet_wrap(~siteID) +

geom_line(data=qpr24$PARQL_30min

[which(qpr24$PARQL_30min$horizontalPosition=="001"),],

color="blue", alpha=0.3)

gg

Here, we can see the kurtosis values have shifted slightly higher in RELEASE-2024, relative to their values in RELEASE-2023. This is a metric of the distribution of PAR observations within the averaging interval; if this aspect of variability is important for your analysis, you would now be able to incorporate these improved estimates into your work.

Data citation

We saw above that citation information is available on the data product detail

pages on the Data Portal. The neonUtilities package functions also provide

citations in BibTeX.

Provisional:

writeLines(qpr$citation_00066_PROVISIONAL)

## @misc{DP1.00066.001/provisional,

## doi = {},

## url = {https://data.neonscience.org/data-products/DP1.00066.001},

## author = {{National Ecological Observatory Network (NEON)}},

## language = {en},

## title = {Photosynthetically active radiation (quantum line) (DP1.00066.001)},

## publisher = {National Ecological Observatory Network (NEON)},

## year = {2024}

## }

RELEASE-2024:

writeLines(qpr$`citation_00066_RELEASE-2024`)

## @misc{https://doi.org/10.48443/8r8b-0789,

## doi = {10.48443/8R8B-0789},

## url = {https://data.neonscience.org/data-products/DP1.00066.001/RELEASE-2024},

## author = {{National Ecological Observatory Network (NEON)}},

## keywords = {solar radiation, soil surface radiation, photosynthetically active radiation (PAR), photosynthetic photon flux density (PPFD), quantum line sensor},

## language = {en},

## title = {Photosynthetically active radiation (quantum line) (DP1.00066.001)},

## publisher = {National Ecological Observatory Network (NEON)},

## year = {2024},

## copyright = {Creative Commons Zero v1.0 Universal}

## }

These can be adapted as needed for other formatting conventions.

Data management

Within the neonUtilities package, some functions download data, some

perform data wrangling on data you've already downloaded, and some do

both. Different approaches are practical for Released and Provisional

data.

Since data in a Release never change, there's no need to download a Release multiple times. On the other hand, if you're working with Provisional data, you may want to re-download each time you work on an analysis, to ensure you're always working with the most up-to-date data.

The neonUtilities cheat sheet includes an overview

of the operations carried out by each function, for reference.

Here, we'll outline some suggested workflows for data management, organized by NEON measurement system.

Sensor or observational (IS/OS)

Most people working with NEON's tabular data (OS and IS) use the

loadByProduct() function to download and stack data files. It

downloads data from the NEON API every time you run it. When

working with data in a Release, it can be convenient to save the

downloaded data as an R object and re-load it the next time you

work on your analysis, rather than downloading again.

tick <- loadByProduct(dpID="DP1.10093.001",

site=c("GUAN"),

release="RELEASE-2024",

check.size=F)

saveRDS(tick, paste0(getwd(), "/NEON_tick_data.rds"))

And the next time you start work:

tick <- readRDS(paste0(getwd(), "/NEON_tick_data.rds"))

When working with Provisional data, you can run

loadByProduct() every time to get the most recent data, but

be sure to save and archive the final version you use in a

publication, for citation and reproducibility. Guidelines for

archiving a dataset can be found on the

Publishing Research Outputs webpage.

Eddy covariance or atmospheric isotopes (SAE)

Because SAE files are so large, stackEddy() is designed to extract

the desired variables from locally stored files, and it works the

same way on files downloaded from the Data Portal or by

zipsByProduct(). You can download once, using your preferred method,

and then use stackEddy() every time you need to access any of the

file contents.

zipsByProduct(dpID="DP4.00200.001",

site=c("TEAK"),

startdate="2023-05",

enddate="2023-06",

release="RELEASE-2024",

savepath=getwd(),

check.size=F)

flux <- stackEddy(paste0(getwd(), "/filesToStack00200"),

level="dp04")

The next time you need to work with these data, you can skip the

zipsByProduct() line and go straight to stackEddy(). And if you

come back later and decide you want to work with the isotope data

instead of the flux data, still no need to re-download:

iso <- stackEddy(paste0(getwd(), "/filesToStack00200"),

level="dp01", var="isoCo2", avg=6)

If you're working with Provisional SAE data, you may be thinking you'll need to re-download regularly. But SAE data are rarely re-processed outside of the annual release schedule, due to the large computational demands of SAE processing. Each month, you can download newly published Provisional data that were collected the previous month, but you won't need to re-download older months.

Remote sensing (AOP)

Data Releases are handled a bit differently for AOP than the other data systems. Due to the very large volume of data, past Releases of AOP data are not available for download. Only the most recent Release and Provisional data can be downloaded at any given time.

DOIs for past Releases of AOP data remain available, and can be used to cite the data in perpetuity. Their DOI status is set to "tombstone", the term used to denote a dataset that is citable but no longer accessible.

See the Large Data Packages section on the Publishing Research Outputs page for suggestions about archiving large datasets.

Get Lesson Code

Understanding AOP Data Releases and Best Practices for AOP Data Management

Authors: Bridget Hass

Last Updated: Dec 10, 2025

What is a NEON Data Release?

A NEON Data Release is a fixed set of data that does not change over time. Each data product in a Release is associated with a unique Digital Object Identifier (DOI) that can be used for data citation. Because the data in a Release do not change, analyses performed on those data are traceable and reproducible.

NEON data are initially published under a Provisional status, meaning that data may be updated on an as-needed basis, without guarantee of reproducibility. Publishing Provisional data allows NEON to publish data rapidly while retaining the ability to make corrections or additions as the need is identified. Most of the time, unless issues are discovered in the Provisional data, the Provisional data will become Released - so Provisional data are still valid to use. If any issues are identified, they will be included in the data product issue logs.

For more details about NEON Data Releases, see the Understanding Releases and Provisional Data tutorial and the Data Product Revisions and Releases web page.

How are AOP Releases different than the other NEON subsystems (IS/OS)?

Unlike NEON's other systems (Instrumented/IS and Observational/OS), AOP (Remote Sensing) does not preserve historical versions of data. Most AOP data products are high volume; thus it is expensive to store and make openly and freely available more than the most recent version. Therefore, NEON's annual releases for AOP data products are only available for the current release year – from the date of release to approximately 11 months later when the AOP team begins preparing for the next year’s release. For example, Release-2023 versions of AOP data products were available from January 27, 2023 until December 20, 2023.

DOIs for AOP data products for a given RELEASE will be tombstoned prior to each subsequent Release. These tombstoned data can be thought of as being "out of print": the DOI for each data product release is still valid, but the version of the data that the DOI referred to is no longer available for download. A DOI that has been tombstoned will resolve to the data product release's webpage which explains that the released version of the product is no longer available for download (e.g. Ecosystem structure RELEASE-2023, also shown in the figure below).

Prior to each annual NEON Release, AOP scientists review the existing data and reprocess data if any issues are identified. Then they begin an approximately month-long transition period of replacing older files with newer ones. During this period, the current data release tag may no longer point to the same exact files for certain AOP data products that are undergoing updates. Although the data portal or API may indicate availability of a data product at specific sites for specific months, some files may be unavailable for few days (or longer) before being replaced by updated versions.

NEON will publish a Data Notification indicating when AOP is transitioning between one Release and the next (e.g., AOP Data Availability Notification – Release 2024). We suggest holding off on downloading AOP data during this interim period if it is not urgent, or submitting an inquiry through the NEON Contact Us Form to obtain information about the status of the data products, sites, and years that you are interested in.

How can I tell if the AOP data I've downloaded is up to date?

There are a few ways to check if and what AOP data have been updated since you last downloaded the data, both on the website, and programmatically using functionality built into the AOP download functions. On the website, you can check the Issue Log tables on each data product page and also see the Issue resolutions, and you can also check the Release pages for a complete summary of what has been changed between the past release and the current one; for example Release 2025. This tutorial will demonstrate some of the programmatic options to check if published AOP data has been modified compared to your local version.

Objectives

After completing this activity, you will be able to:

- Find available Released and Provisional AOP data for a given site and data product

- Understand options for downloading AOP data

- Display citation information for both Released and Provisional data

- Learn about available tools to reduce large data downloads

- Understand some basic best practices for working with large volumes of AOP data

Things You’ll Need To Complete This Tutorial

To complete this tutorial, you will need a version of Python (3.9 or higher), the latest Python neonutilities package (1.2 or higher) and, preferably, Jupyter Notebooks or Spyder installed on your computer. Much of the lesson can also be carried out in R using the R neonUtilities package; however some of the functionality that is demonstrated is currently only available in Python.

Additional Resources

Import Required Packages

For this tutorial, we will mainly be exploring the functions imported below in the neonutilities package that allow us to explore availability of and download AOP data.

import csv

import os

from neonutilities import by_file_aop, list_available_dates, get_citation

First, we can use some the list_available_dates function to find available data. This will show us the data that is available both provisionally and as part of the latest release. First, run help(list_available_dates) to see the required inputs of this function.

help(list_available_dates)

Help on function list_available_dates in module neonutilities.aop_download:

list_available_dates(dpid, site)

list_available_dates displays the available releases and dates for a given product and site

--------

Inputs:

dpid: the data product code (eg. 'DP3.30015.001' - CHM)

site: the 4-digit NEON site code (eg. 'JORN')

--------

Returns:

prints the Release Tag (or PROVISIONAL) and the corresponding available dates (YYYY-MM) for each tag

--------

Usage:

--------

>>> list_available_dates('DP3.30015.001','JORN')

RELEASE-2025 Available Dates: 2017-08, 2018-08, 2019-08, 2021-08, 2022-09

>>> list_available_dates('DP3.30015.001','HOPB')

PROVISIONAL Available Dates: 2024-09

RELEASE-2025 Available Dates: 2016-08, 2017-08, 2019-08, 2022-08

>>> list_available_dates('DP1.10098.001','HOPB')

ValueError: There are no data available for the data product DP1.10098.001 at the site HOPB.

The required inputs for this function are the data product id (dpid) and the site code (site). Let's try this out for the Canopy Height Model (Ecosystem Structure) data product at the McRae Creek site in Oregon (MCRA), to start. The CHM data product has the code DP3.30015.001.

list_available_dates('DP3.30015.001','MCRA')

PROVISIONAL Available Dates: 2025-08

RELEASE-2025 Available Dates: 2018-07, 2021-07, 2022-07, 2023-07

We can see that as of Dec 2025, CHM data at MCRA are available provisionally in 2025, and as part of RELEASE-2025 for the years 2018, 2021, 2022, and 2023. MCRA is a non-collocated aquatic site and is collected on an opportunistic basis, so it has been flown a little less frequently than the terrestrial or collocated aquatic sites.

We can download the CHM data from the site MCRA collected in 2023 and 2025 using the neonutilities function by_file_aop. Note that by_file_aop downloads all available data for a given data product, while by_tile_aop downloads only data that intersect provided UTM coordinates (easting and northing). For the purposes of this tutorial, we will stick to using by_file_aop, but note that you could use by_tile_aop similarly, provided you know which tiles you want to download. First, take a quick look at the function documentation using help.

help(by_file_aop)

Help on function by_file_aop in module neonutilities.aop_download:

by_file_aop(dpid, site, year, include_provisional=False, check_size=True, savepath=None, chunk_size=1024, token=None, verbose=False, skip_if_exists=False, overwrite='prompt')

This function queries the NEON API for AOP data by site, year, and product, and downloads all

files found, preserving the original folder structure. It downloads files serially to

avoid API rate-limit overload, which may take a long time.

Parameters

--------

dpid: str

The identifier of the NEON data product to pull, in the form DPL.PRNUM.REV, e.g. DP3.30001.001.

site: str

The four-letter code of a single NEON site, e.g. 'CLBJ'.

year: str or int

The four-digit year of data collection.

include_provisional: bool, optional

Should provisional data be downloaded? Defaults to False. See

https://www.neonscience.org/data-samples/data-management/data-revisions-releases

for details on the difference between provisional and released data.

check_size: bool, optional

Should the user approve the total file size before downloading? Defaults to True.

If you have sufficient storage space on your local drive, when working

in batch mode, or other non-interactive workflow, use check_size=False.

savepath: str, optional

The file path to download to. Defaults to None, in which case the working directory is used.

chunk_size: integer, optional

Size in bytes of chunk for chunked download. Defaults to 1024.

token: str, optional

User-specific API token from data.neonscience.org user account. Defaults to None.

See https://data.neonscience.org/data-api/rate-limiting/ for details about API

rate limits and user tokens.

verbose: bool, optional

If set to True, the function will print more detailed information about the download process.

skip_if_exists: bool, optional

If set to True, the function will skip downloading files that already exist in the

savepath and are valid (local checksums match the checksums of the published file).

Defaults to False. If any local file checksums don't match those of files published

on the NEON Data Portal, the user will be prompted to skip these files or overwrite

the existing files with the new ones (see overwrite input).

overwrite: str, optional

Must be one of:

'yes' - overwrite mismatched files without prompting,

'no' - don't overwrite mismatched files (skip them, no prompt),

'prompt' - prompt the user (y/n) to overwrite mismatched files after displaying them (default).

If skip_if_exists is False, this parameter is ignored, and any existing files in

the savepath will be overwritten according to the function's default behavior.

Returns

--------

None; data are downloaded to the directory specified (savepath) or the current working directory.

If data already exist in the expected path, they will be overwritten by default. To check for

existing files before downloading, set skip_if_exists=True along with an overwrite option (y/n/prompt).

Examples

--------

>>> by_file_aop(dpid="DP3.30015.001",

site="MCRA",

year="2021",

savepath="./test_download",

skip_if_exists=True)

# This downloads the 2021 Canopy Height Model data from McRae Creek to the './test_download' directory.

# If any files already exist in the savepath, they will be checked and skipped if they are valid.

# The user will be prompted to ovewrite or skip downloading any existing files that do not match

# the latest published data on the NEON Data Portal.

Notes

--------

This function creates a folder named by the Data Product ID (DPID; e.g. DP3.30015.001) in the

'savepath' directory, containing all AOP files meeting the query criteria. If 'savepath' is

not provided, data are downloaded to the working directory, in a folder named by the DPID.

The required inputs for this function are the data product id (dpid), site id (site) and year (year). Note that there are a number of additional optional inputs, which we will explain more in detail. Some ones that we recommend paying attention to are:

-

token: Your API token from your NEON user account (on data.neonscience.org). Currently defaults to None, but we highly encourage using a token when downloading NEON data. See Using an API Token when Accessing NEON Data with neonUtilities for more details on the benefits of tokens and how to use them. -

include_provisional: setting this toTrueis the only way you can download provisional data. If you've used thelist_available_datesfunction and you want to download data from a year where it is only available provisionally, you must set this option to True in order to download any data. You will see a warning if no data are found. We will show this in the example below. -

savepath: this is the path where data will be downloaded. We recommend setting this to somewhere close to the root folder to avoid very long paths for AOP data. Windows systems can have path length limitations (unless you change them) and if the path is too long you may see a warning or error.

In the most recent version of Python neonutilities (1.2.0, released in October 2025), there are two new optional inputs to the by_file_aop and by_tile_aop functions: skip_if_exists and overwrite. We will go over these options in more detail towards the end of this tutorial. For now, just know that these options provide functionality to avoid re-downloading identical data.

Set the data directory, where data will be downloaded. Make sure this is a path that works on your system and has sufficient space for downloading data.

data_dir = r'C:\NEON_Data'

by_file_aop(dpid='DP3.30015.001',

site='MCRA',

year='2025',

savepath=data_dir)

Provisional NEON data are not included. To download provisional data, use input parameter include_provisional=True.

No NEON data files found. Available data may all be provisional. To download provisional data, use input parameter include_provisional=True.

Now let's set include_provisional=True to see what happens. Select "y" when prompted to download the data (first ensuring you have enough space).

by_file_aop(dpid='DP3.30015.001',

site='MCRA',

year='2025',

savepath=data_dir,

include_provisional=True)

Provisional NEON data are included. To exclude provisional data, use input parameter include_provisional=False.

Continuing will download 32 NEON data files totaling approximately 342.5 MB. Do you want to proceed? (y/n) y

Downloading 32 NEON data files totaling approximately 342.5 MB

100%|██████████████████████████████████████████████████████████████████████████████████| 32/32 [00:42<00:00, 1.32s/it]

You can also optionally set check_size=False if you don't want to be prompted to type yes or no (y/n) after the data volume is displayed. If this is your first time downloading data, we recommend keeping the default setting so you can make sure you have enough space on your computer before downloading.

Ok, simple enough! Let's take a look at the data we have downloaded. We'll write a couple of functions to let us see the folders and some of the files that have been downloaded. Feel free to explore the directory in File Explorer on your own as well.

def get_folders_with_files(path):

"""

Returns a list of folders within the given path that contain files,

excluding folders that only contain subfolders or are empty.

Args:

path (str): The path to the directory to search.

Returns:

list: A list of full paths to folders containing files.

"""

folders_with_files = []

for root, dirs, files in os.walk(path):

# Check if the current 'root' directory contains any files

if files:

folders_with_files.append(root)

return folders_with_files

def display_files_in_subdirectories(path):

"""

Displays files within a given directory and its subdirectories.

Only the first 5 files are shown in each directory if more are present.

Args:

path (str): The path to the root directory to start scanning from.

"""

# if not os.path.isdir(path):

# print(f"Error: '{path}' is not a valid directory.")

# return

for dirpath, dirnames, filenames in os.walk(path):

if filenames:

print(f"\nDirectory: {dirpath}")

# Sort filenames for consistent output, then take the first 5

sorted_filenames = sorted(filenames)

files_to_display = sorted_filenames[:5]

for file in files_to_display:

print(f" - {file}")

if len(sorted_filenames) > 5:

print(f" ... ({len(sorted_filenames) - 5} more files not shown)")

Use these functions to see what we've downloaded:

get_folders_with_files(data_dir)

['C:\\NEON_Data\\DP3.30015.001',

'C:\\NEON_Data\\DP3.30015.001\\neon-aop-provisional-products\\2025\\FullSite\\D16\\2025_MCRA_5\\L3\\DiscreteLidar\\CanopyHeightModelGtif',

'C:\\NEON_Data\\DP3.30015.001\\neon-aop-provisional-products\\2025\\FullSite\\D16\\2025_MCRA_5\\Metadata\\DiscreteLidar\\Reports',

'C:\\NEON_Data\\DP3.30015.001\\neon-aop-provisional-products\\2025\\FullSite\\D16\\2025_MCRA_5\\Metadata\\DiscreteLidar\\TileBoundary',

'C:\\NEON_Data\\DP3.30015.001\\neon-publication\\NEON.DOM.SITE.DP3.30015.001\\MCRA\\20250801T000000--20250901T000000\\basic']

display_files_in_subdirectories(data_dir)

Directory: C:\NEON_Data\DP3.30015.001

- citation_DP3.30015.001_PROVISIONAL.txt

- issueLog_DP3.30015.001.csv

Directory: C:\NEON_Data\DP3.30015.001\neon-aop-provisional-products\2025\FullSite\D16\2025_MCRA_5\L3\DiscreteLidar\CanopyHeightModelGtif

- NEON_D16_MCRA_DP3_565000_4900000_CHM.tif

- NEON_D16_MCRA_DP3_565000_4901000_CHM.tif

- NEON_D16_MCRA_DP3_565000_4902000_CHM.tif

- NEON_D16_MCRA_DP3_565000_4903000_CHM.tif

- NEON_D16_MCRA_DP3_565000_4904000_CHM.tif

... (22 more files not shown)

Directory: C:\NEON_Data\DP3.30015.001\neon-aop-provisional-products\2025\FullSite\D16\2025_MCRA_5\Metadata\DiscreteLidar\Reports

- 2025080216_P3C1_SBET_QAQC.pdf

- 2025_MCRA_5_L1_discrete_lidar_qa.html

- 2025_MCRA_5_L3_discrete_lidar_qa.html

Directory: C:\NEON_Data\DP3.30015.001\neon-aop-provisional-products\2025\FullSite\D16\2025_MCRA_5\Metadata\DiscreteLidar\TileBoundary

- 2025_MCRA_5_TileBoundary.zip

Directory: C:\NEON_Data\DP3.30015.001\neon-publication\NEON.DOM.SITE.DP3.30015.001\MCRA\20250801T000000--20250901T000000\basic

- NEON.D16.MCRA.DP3.30015.001.readme.20250926T050145Z.txt

We can see that we've downloaded some files in subfolders under the C:\NEON_Data\DP3.30015.001 folder. The provisional data are stored in a provisional bucket (or cloud storage path) called neon-aop-provisional-products and the full path of the data as it is stored on cloud storage is preserved in order to maintain organization. This is helpful if you are working with multiple data products, sites, and/or years of data. Note that there is an L3\DiscreteLidar\CanopyHeightModelGtiffolder - this contains the Level 3 (L3) geotiff files (or .tif tiles), and there is also a Metadata\DiscreteLidar folder, which contains the subfolders called Reports and TileBoundary. The reports are informational documents (in pdf or html format) summarizing the processing parameters and useful quality information. The TileBoundary folder contains shapefiles and kml files that provide useful information about the extent of the data. Please explore the data more on your own!

Before continuing, let's take a quick look at the files citation_DP3.30015.001_PROVISIONAL.txt and issueLog_DP3.30015.001.csv that were downloaded in the C:\NEON_Data\DP3.30015.001 folder.

with open(r'C:\NEON_Data\DP3.30015.001\citation_DP3.30015.001_PROVISIONAL.txt', 'r', newline='') as file:

reader = csv.reader(file)

for row in reader:

print(row[0])

@misc{DP3.30015.001/provisional

doi = {}

url = {https://data.neonscience.org/data-products/DP3.30015.001}

author = {{National Ecological Observatory Network (NEON)}}

language = {en}

title = {Ecosystem structure (DP3.30015.001)}

publisher = {National Ecological Observatory Network (NEON)}

year = {2025}

}