Series

Introduction to Hierarchical Data Format (HDF5) - Using HDFView and R

This series goes through what the HDF5 format is and how to open, read, and create HDF5 files in R. We also cover extracting and plotting data from HDF5 files.

Data used in this series are from the National Ecological Observatory Network (NEON) and are in HDF5 format.

Series Objectives

After completing the series you will:

- Understand how data can be structured and stored in HDF5

- Understand how metadata can be added to an HDF5 file

- Know how to explore HDF5 files using HDFView, a free tool for viewing HDF4 and HDF5 files

- Know how to work with HDF5 files in R

- Know how to work with time-series data within a nested HDF5 file

Things You’ll Need To Complete This Series

You will need the most current version of R and, preferably, RStudio loaded on your computer to complete this tutorial.

R is a programming language that specializes in statistical computing. It is a powerful tool for exploratory data analysis. To interact with R, we strongly recommend using RStudio, an integrated development environment (IDE).

Download Data

Data is available for download in those tutorials that focus on teaching data skills.

Hierarchical Data Formats - What is HDF5?

Authors: Leah A. Wasser

Last Updated: Apr 10, 2025

Learning Objectives

After completing this tutorial, you will be able to:

- Explain what the Hierarchical Data Format (HDF5) is.

- Describe the key benefits of the HDF5 format, particularly related to big data.

- Describe both the types of data that can be stored in HDF5 and how it can be stored/structured.

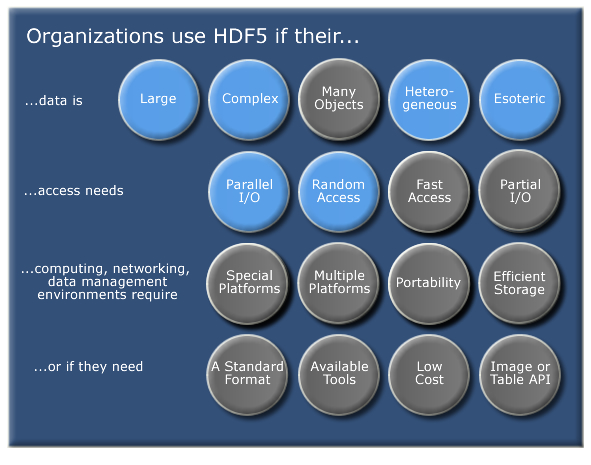

About Hierarchical Data Formats - HDF5

The Hierarchical Data Format version 5 (HDF5) is an open source file format that supports large, complex, heterogeneous data. HDF5 uses a "file directory" like structure that allows you to organize data within the file in many different structured ways, as you might do with files on your computer. The HDF5 format also allows metadata to be embedded in the file, making it self-describing.

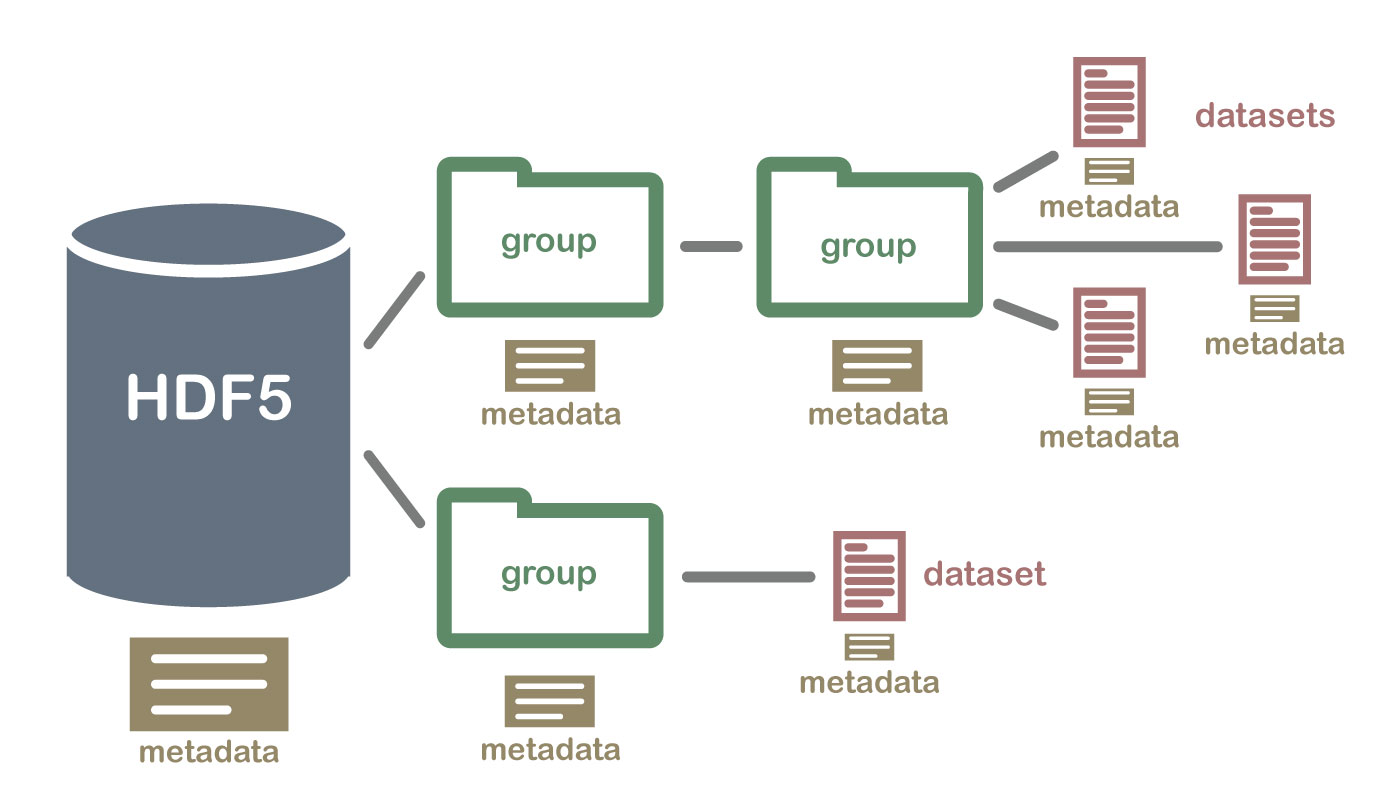

Hierarchical Structure - A file directory within a file

The HDF5 format can be thought of as a file system contained and described within one single file. Think about the files and folders stored on your computer. You might have a data directory with some temperature data for multiple field sites. These temperature data are collected every minute and summarized on an hourly, daily and weekly basis. Within one HDF5 file, you can store a similar set of data organized in the same way that you might organize files and folders on your computer. However, in an HDF5 file, what we call "directories" or "folders" on our computers are called groups, and what we call files on our computer are called datasets.
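To make the folder analogy concrete, here is a minimal R sketch using the rhdf5 package (introduced later in this series). It assumes rhdf5 is installed from Bioconductor; the site names `siteA` and `siteB`, the dataset names `hourly` and `daily`, and the random values are hypothetical placeholders, not NEON data. The file is written to a temporary location so nothing on your computer is overwritten.

```r
# Sketch: mirror a "folders containing files" layout inside one HDF5 file.
# Assumes rhdf5 is installed; site names, dataset names and values are hypothetical.
library(rhdf5)

h5file <- tempfile(fileext = ".h5")  # temporary file, so nothing is overwritten
h5createFile(h5file)

sites <- c("siteA", "siteB")
for (site in sites) {
  h5createGroup(h5file, site)  # a group plays the role of a folder
  # datasets play the role of files stored inside that folder
  h5write(rnorm(24, mean = 20, sd = 3), file = h5file, name = paste0(site, "/hourly"))
  h5write(rnorm(7, mean = 20, sd = 2), file = h5file, name = paste0(site, "/daily"))
}

# list every group and dataset, similar to a recursive directory listing
contents <- h5ls(h5file)
print(contents)
```

In the `h5ls()` listing, the two site groups appear under `/`, and each dataset appears under its site's path (for example `/siteA`), much like the folders and files in a directory tree.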

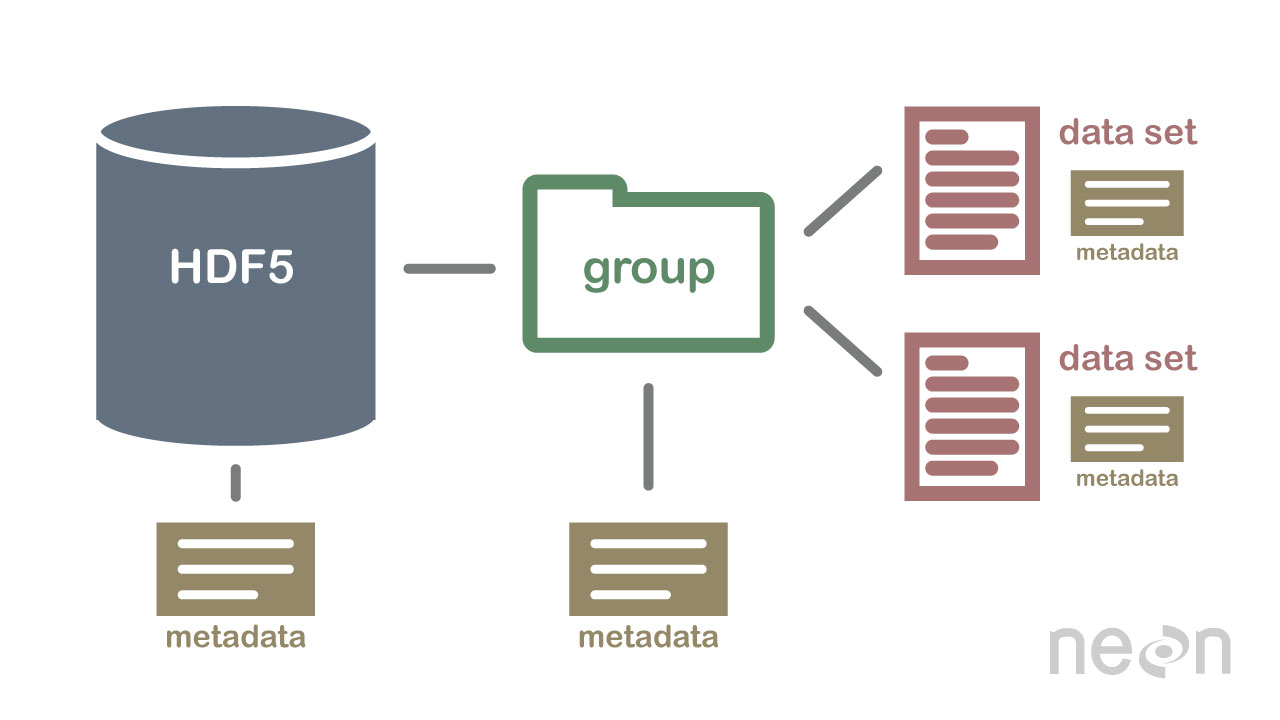

2 Important HDF5 Terms

- Group: A folder-like element within an HDF5 file that might contain other groups OR datasets within it.

- Dataset: The actual data contained within the HDF5 file. Datasets are often (but don't have to be) stored within groups in the file.

An HDF5 file containing datasets might be structured like this:

HDF5 is a Self Describing Format

The HDF5 format is self-describing. This means that each file, group and dataset can have associated metadata that describes exactly what the data are. Following the example above, we can embed information about each site in the file, such as:

- The full name and X,Y location of the site

- Description of the site.

- Any documentation of interest.

Similarly, we might add information about how the data in the dataset were collected, such as descriptions of the sensor used to collect the temperature data. We can also attach information to each dataset within the site group describing how the averaging was performed and over what time period data are available.

One key benefit of attaching metadata to each file, group, and dataset is that it facilitates automation without the need for a separate (and additional) metadata document. Using a programming language like R or Python, we can grab the information we need to process a dataset directly from the metadata that are already associated with it.
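As a sketch of that kind of automation, the R example below stores a `Units` attribute alongside a small temperature dataset and then lets a script decide how to process the values by reading that attribute. It assumes the rhdf5 package is installed; the attribute name `Units`, its value, and the Fahrenheit readings are hypothetical examples, not a NEON convention.

```r
# Sketch: metadata stored in the file drives how the data are processed.
# Assumes rhdf5 is installed; the "Units" attribute and the values are hypothetical.
library(rhdf5)

h5file <- tempfile(fileext = ".h5")
h5createFile(h5file)
h5write(c(68, 77, 86), file = h5file, name = "temperature")

# attach a "Units" attribute; h5writeAttribute() needs an open dataset handle
fid <- H5Fopen(h5file)
did <- H5Dopen(fid, "temperature")
h5writeAttribute("degrees Fahrenheit", did, name = "Units")
H5Dclose(did)
H5Fclose(fid)

# later, a script reads the attribute instead of consulting a separate document
stored_units <- h5readAttributes(h5file, "temperature")$Units
temp <- as.vector(h5read(h5file, "temperature"))
if (stored_units == "degrees Fahrenheit") {
  temp <- (temp - 32) * 5 / 9  # convert to Celsius because the metadata says so
}
print(temp)
```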

Compression & Efficient Subsetting

The HDF5 format supports compression: datasets can be stored compressed (for example, with the built-in gzip filter), which makes the overall file size smaller. Even when compressed, however, HDF5 files often contain big data and can thus still be quite large. A powerful feature of HDF5 is data slicing, by which particular subsets of a dataset can be extracted for processing. This means that the entire dataset doesn't have to be read into memory (RAM), which is very helpful when working with very large (gigabytes or more) datasets!
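The R sketch below shows both ideas together: it creates a chunked, gzip-compressed dataset with rhdf5's `h5createDataset()` and then reads back only a small slice with the `index` argument of `h5read()`. It assumes rhdf5 is installed; the dataset name `temp`, its 10,000 x 3 size, the 1,000-row chunks, and compression level 6 are illustrative choices, not recommendations.

```r
# Sketch: a chunked, gzip-compressed dataset that is read back in slices.
# Assumes rhdf5 is installed; sizes, chunking and compression level are illustrative.
library(rhdf5)

h5file <- tempfile(fileext = ".h5")
h5createFile(h5file)

# 10,000 time steps x 3 sensors, stored as chunks of 1,000 rows with gzip level 6
h5createDataset(h5file, "temp", dims = c(10000, 3), storage.mode = "double",
                chunk = c(1000, 3), level = 6)
h5write(matrix(rnorm(30000, mean = 25, sd = 5), ncol = 3), file = h5file, name = "temp")

# read only rows 1-100 of sensor 2 rather than the whole matrix
slice <- h5read(h5file, "temp", index = list(1:100, 2))
print(dim(slice))
```

Chunking matters here because compression is applied chunk by chunk, so a slice only requires decompressing the chunks it touches.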

Heterogeneous Data Storage

HDF5 files can store many different types of data within the same file. For example, one group may contain a set of datasets holding integer (numeric) and text (string) data. Or, one dataset can contain heterogeneous data types (e.g., both text and numeric data in one dataset). This means that HDF5 can store any of the following (and more) in one file:

- Temperature, precipitation and PAR (photosynthetically active radiation) data for a site or for many sites

- A set of images that cover one or more areas (each image can have specific spatial information associated with it - all in the same file)

- A multispectral or hyperspectral spatial dataset, which may contain hundreds of bands.

- Field data for several sites characterizing insects, mammals, vegetation and meteorology.


- And much more!
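Here is a small R sketch of one group holding datasets of different types: floating-point values, integers, and text strings. It assumes the rhdf5 package is installed; the group name `site`, the dataset names, and the values (including the species names) are hypothetical.

```r
# Sketch: one group holding numeric, integer and text datasets side by side.
# Assumes rhdf5 is installed; all names and values are hypothetical.
library(rhdf5)

h5file <- tempfile(fileext = ".h5")
h5createFile(h5file)
h5createGroup(h5file, "site")

h5write(matrix(c(1.5, 2.5, 3.5, 4.5), nrow = 2), file = h5file, name = "site/precip")  # floats
h5write(c(3L, 5L, 8L), file = h5file, name = "site/counts")                          # integers
h5write(c("oak", "pine", "sagebrush"), file = h5file, name = "site/species")         # strings

# the dclass column reports the stored data type of each dataset
contents <- h5ls(h5file)
print(contents[, c("group", "name", "dclass")])

species <- as.character(h5read(h5file, "site/species"))
counts  <- as.integer(h5read(h5file, "site/counts"))
```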

Open Format

The HDF5 format is open and free to use. The supporting libraries (and a free viewer) can be downloaded from the HDF Group website. As such, HDF5 is widely supported in a host of programs, including open source programming languages like R and Python, and commercial programming tools like Matlab and IDL. Spatial data that are stored in HDF5 format can be used in GIS and imaging programs including QGIS, ArcGIS, and ENVI.

Summary Points - Benefits of HDF5

- Self-Describing: The datasets within an HDF5 file are self-describing. This allows us to efficiently extract metadata without needing an additional metadata document.

- Supports Heterogeneous Data: Different types of datasets can be contained within one HDF5 file.

- Supports Large, Complex Data: HDF5 supports compression and is designed to handle large, heterogeneous, and complex datasets.

- Supports Data Slicing: "Data slicing", or extracting portions of the dataset as needed for analysis, means large files don't need to be completely read into the computer's memory (RAM).

- Open Format with Wide Tool Support: Because the HDF5 format is open, it is supported by a host of programming languages and tools, including open source languages like R and Python and open GIS tools like QGIS.

HDFView: Exploring HDF5 Files in the Free HDFview Tool

Authors: Leah A. Wasser

Last Updated: Apr 8, 2021

In this tutorial you will use the free HDFView tool to explore HDF5 files and the groups and datasets contained within. You will also see how HDF5 files can be structured and explore metadata using both spatial and temporal data stored in HDF5!

Learning Objectives

After completing this activity, you will be able to:

- Explain how data can be structured and stored in HDF5.

- Navigate to metadata in an HDF5 file, making it "self describing".

- Explore HDF5 files using the free HDFView application.

Tools You Will Need

Install the free HDFView application. This application allows you to explore the contents of an HDF5 file easily. It can be downloaded from the HDF Group website.

Data to Download

NOTE: The first file downloaded below has an .HDF5 file extension, and the second has an .h5 extension. Both extensions represent the HDF5 data type.

NEON Teaching Data Subset: Sample Tower Temperature - HDF5

These temperature data were collected by the National Ecological Observatory Network's flux towers at field sites across the US. The entire dataset can be accessed by request from the NEON Data Portal.

NEON Teaching Data Subset: Imaging Spectrometer Data - HDF5

These hyperspectral remote sensing data provide information on the National Ecological Observatory Network's San Joaquin Experimental Range field site. The data were collected over the San Joaquin field site located in California (Domain 17) and processed at NEON headquarters. The entire dataset can be accessed by request from the NEON Data Portal.

Installing HDFView

Select the HDFView download option that matches the operating system (Mac OS X, Windows, or Linux) and computer setup (32 bit vs 64 bit) that you have.

This tutorial was written with graphics from the VS 2012 version, but it is applicable to other versions as well.

Hierarchical Data Format 5 - HDF5

Hierarchical Data Format version 5 (HDF5) is an open file format that supports large, complex, heterogeneous data. Some key points about HDF5:

- HDF5 uses a "file directory" like structure.

- The HDF5 data model organizes information using groups. Each group may contain one or more datasets.

- HDF5 is a self-describing file format. This means that the metadata for the data contained within the HDF5 file are built into the file itself.

- One HDF5 file may contain several heterogeneous data types (e.g. images, numeric data, data stored as strings).

For more introduction to the HDF5 format, see our About Hierarchical Data Formats - What is HDF5? tutorial.

In this tutorial, we will explore two different types of data saved in HDF5. This will allow us to better understand how one file can store multiple different types of data, in different ways.

Part 1: Exploring Temperature Data in HDF5 Format in HDFView

The first thing that we will do is open an HDF5 file in the viewer to get a better idea of how HDF5 files can be structured.

Open a HDF5/H5 file in HDFView

To begin, open the HDFView application.

Within the HDFView application, select File --> Open and navigate to the folder where you saved the NEONDSTowerTemperatureData.hdf5 file on your computer. Open this file in HDFView.

If you click on the name of the HDF5 file in the left hand window of HDFView, you can view metadata for the file. This will be located in the bottom window of the application.

Explore File Structure in HDFView

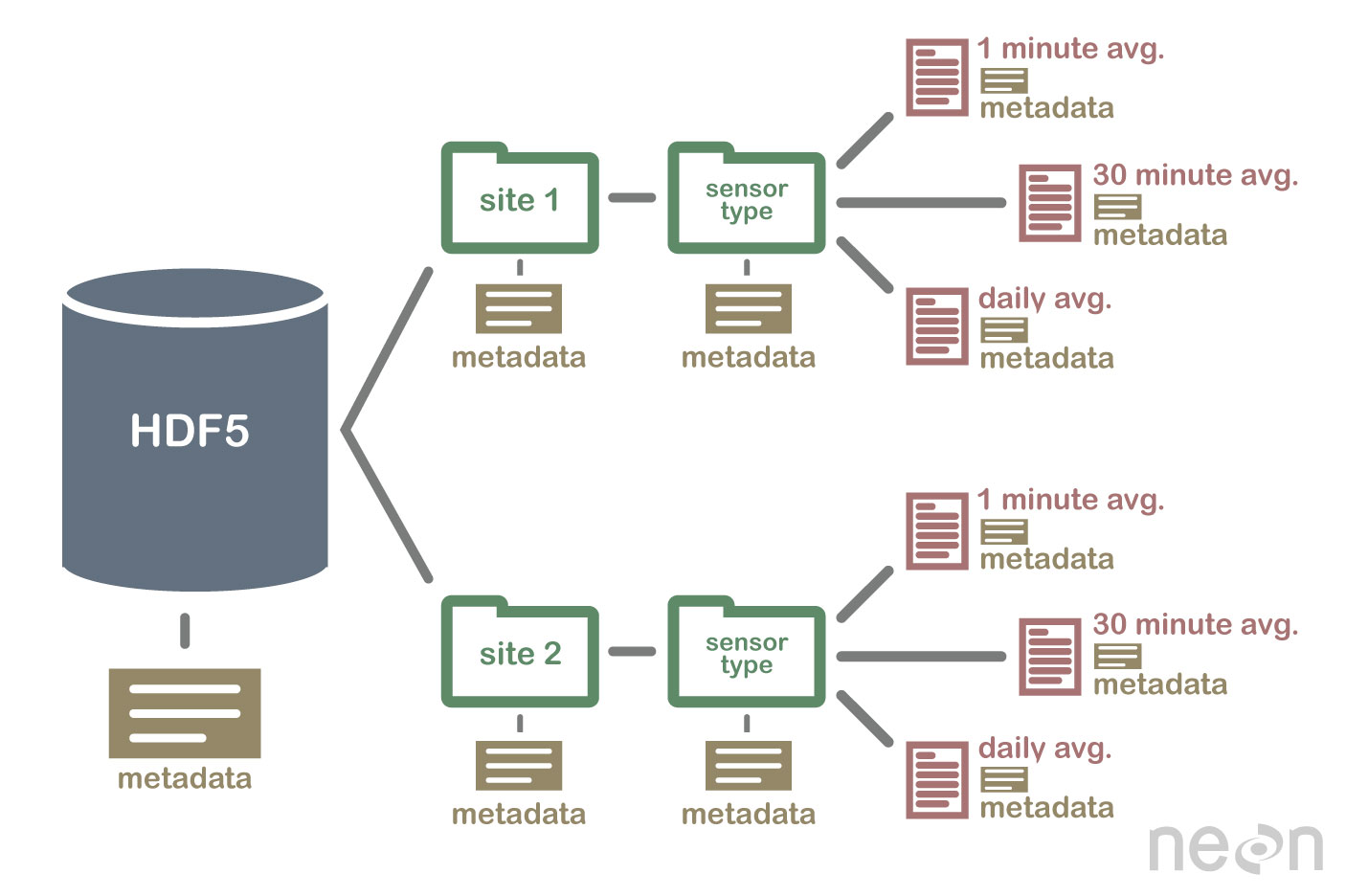

Next, explore the structure of this file. Notice that there are two groups (represented as folder icons in the viewer) called "Domain_03" and "Domain_10". Within each domain group, there are site groups (NEON sites that are located within those domains). Expand these folders by double clicking on the folder icons. Double clicking expands the group's contents just as you might expand a folder in Windows Explorer.

Notice that there is metadata associated with each group.

Double click on the OSBS group located within the Domain_03 group. Notice in the metadata window that OSBS contains data collected from the NEON Ordway-Swisher Biological Station field site.

Within the OSBS group there are two more groups: Min_1 and Min_30. What data are contained within these groups?

Expand the "min_1" group within the OSBS site in Domain_03. Notice that there are five more nested groups named "Boom_1, 2, etc". A boom refers to an arm on a tower, which sits at a particular height and to which are attached sensors for collecting data on such variables as temperature, wind speed, precipitation, etc. In this case, we are working with data collected using temperature sensors, mounted on the tower booms.

Speaking of temperature: what type of sensor collected the data within the boom_1 folder at the Ordway-Swisher site? HINT: check the metadata for that dataset.

Expand the "Boom_1" folder by double clicking it. Finally, we have arrived at a dataset! Have a look at the metadata associated with the temperature dataset within the boom_1 group. Notice that there is metadata describing each attribute in the temperature dataset. Double click on the dataset name to open the data in a tabular format. Notice that these data are temporal.

So this is one example of how an HDF5 file could be structured. This particular file contains data from multiple sites, collected from different sensors (mounted on different booms on the tower) and collected over time. Take some time to explore this HDF5 dataset within the HDFViewer.

Part 2: Exploring Hyperspectral Imagery stored in HDF5

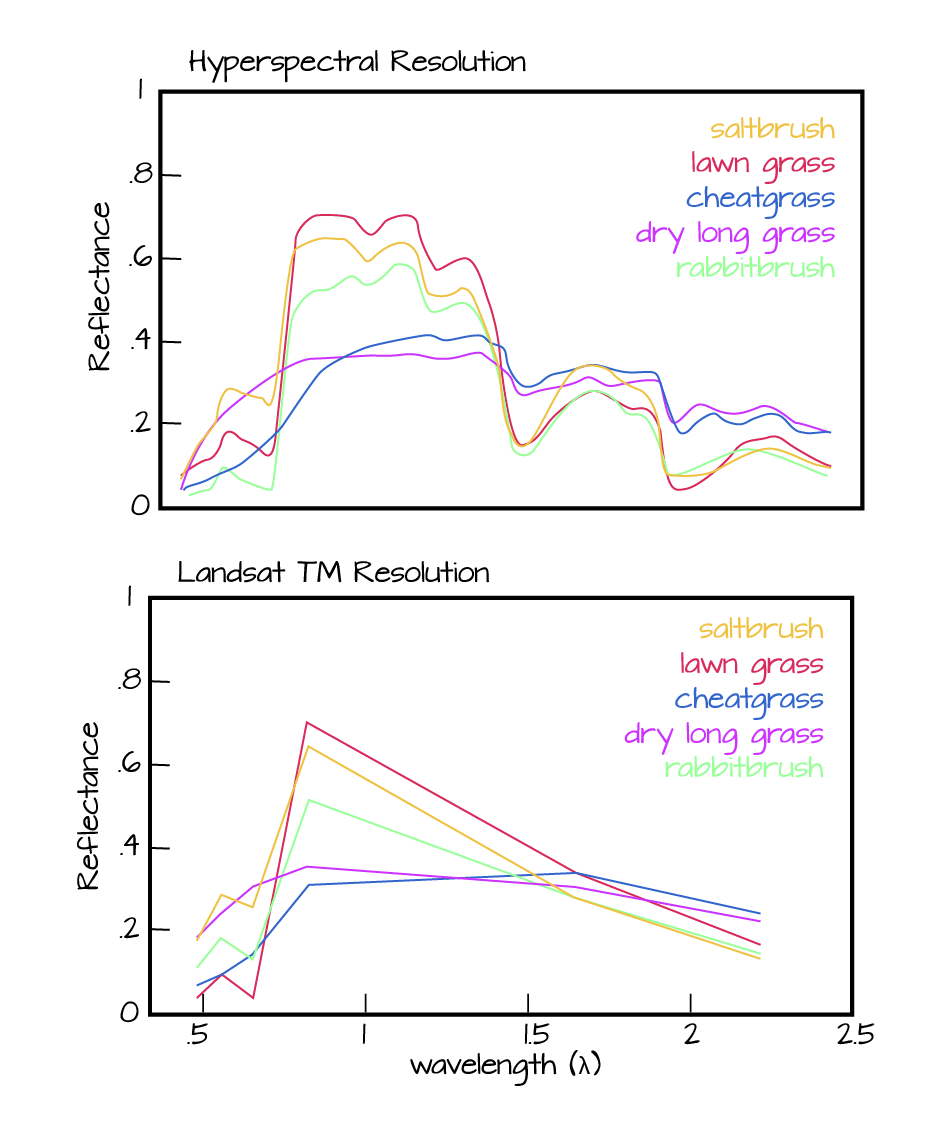

Next, we will explore a hyperspectral dataset, collected by the NEON Airborne Observation Platform (AOP) and saved in HDF5 format. Hyperspectral data are naturally hierarchical, as each pixel in the dataset contains reflectance values for hundreds of bands collected by the sensor. The NEON sensor (imaging spectrometer) collected data within 426 bands.

A few notes about hyperspectral imagery:

- An imaging spectrometer, which collects hyperspectral imagery, records light energy reflected off objects on the earth's surface.

- The data are inherently spatial. Each "pixel" in the image is located spatially and represents an area of ground on the earth.

- Like a Red, Green, Blue (RGB) camera, an imaging spectrometer records reflected light energy. However, where an RGB camera records three bands, each pixel recorded by an imaging spectrometer contains several hundred bands worth of reflectance data.


Let's open some hyperspectral imagery stored in HDF5 format to see what the file structure can look like for a different type of data.

Open the file. Notice that it is structured differently. This file is composed of 3 datasets:

- Reflectance,

- fwhm, and

- wavelength.

It also contains some text information called "map info". Finally it contains a group called spatial info.

Let's first look at the metadata stored in the spatialinfo group. This group contains all of the spatial information that a GIS program would need to project the data spatially.

Next, double click on the wavelength dataset. Note that this dataset contains the central wavelength value for each band in the dataset.

Finally, click on the reflectance dataset. Note in the metadata that the structure of the dataset is 426 x 501 x 477 (wavelength, line, sample). Right click on the reflectance dataset and select Open As. Click Image in the "display as" settings on the left hand side of the popup.

In this case, the image data are in the second and third dimensions of this dataset. However, HDFView defaults to selecting the first and second dimensions.

Let’s tell the HDFViewer to use the second and third dimensions to view the image:

- Under height, make sure dim 1 is selected.

- Under width, make sure dim 2 is selected.

Notice an image preview appears on the left of the pop-up window. Click OK to open the image. You may have to play with the brightness and contrast settings in the viewer to see the data properly.

Explore the spectral dataset in the HDFViewer taking note of the metadata and data stored within the file.
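The same "pick two dimensions to form an image" idea can be sketched in R with rhdf5. Because the NEON file is large, this example assumes a small simulated cube instead: a 5 x 4 x 3 array of random values ordered (band, line, sample) in R, stored under the hypothetical dataset name `Reflectance`. One caution, stated as an assumption to check rather than a rule: rhdf5 may report the dimensions of a file written by other software in the reverse order from HDFView, because R stores arrays column-major, so inspect `h5ls()` before choosing an index.

```r
# Sketch: extract one band (a 2-D image) from a simulated reflectance cube.
# Assumes rhdf5 is installed; the cube size, values and dataset name are hypothetical.
library(rhdf5)

h5file <- tempfile(fileext = ".h5")
h5createFile(h5file)

# dimensions ordered (band, line, sample) within R
cube <- array(runif(5 * 4 * 3), dim = c(5, 4, 3))
h5write(cube, file = h5file, name = "Reflectance")

# band 2 with every line and every sample: NULL selects a whole dimension
band2 <- h5read(h5file, "Reflectance", index = list(2, NULL, NULL))
img <- drop(band2)  # drop the length-1 band dimension, leaving a 4 x 3 matrix
print(dim(img))
```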

Introduction to HDF5 Files in R

Authors: Leah A. Wasser

Last Updated: Nov 23, 2020

Learning Objectives

After completing this tutorial, you will be able to:

- Understand how HDF5 files can be created and structured in R using the rhdf5 libraries.

- Understand the three key HDF5 elements: the HDF5 file itself, groups, and datasets.

- Understand how to add and read attributes from an HDF5 file.

Things You’ll Need To Complete This Tutorial

To complete this tutorial you will need the most current version of R and, preferably, RStudio loaded on your computer.

R Libraries to Install:

- rhdf5: The rhdf5 package is hosted on Bioconductor not CRAN. Directions for installation are in the first code chunk.

More on Packages in R – Adapted from Software Carpentry.

Data to Download

We will use the file below in the optional challenge activity at the end of this tutorial.

NEON Teaching Data Subset: Field Site Spatial Data

These remote sensing data files provide information on the vegetation at the National Ecological Observatory Network's San Joaquin Experimental Range and Soaproot Saddle field sites. The entire dataset can be accessed by request from the NEON Data Portal.

Set Working Directory: This lesson assumes that you have set your working directory to the location of the downloaded and unzipped data subsets.

An overview of setting the working directory in R can be found here.

R Script & Challenge Code: NEON data lessons often contain challenges that reinforce learned skills. If available, the code for challenge solutions is found in the downloadable R script of the entire lesson, available in the footer of each lesson page.

Additional Resources

Consider reviewing the documentation for the RHDF5 package.

About HDF5

The HDF5 file can store large, heterogeneous datasets that include metadata. It also supports efficient data slicing, or extraction of particular subsets of a dataset, which means that you don't have to read large files into your computer's memory (RAM) in their entirety in order to work with them.

HDF5 in R

To access HDF5 files in R, we will use the rhdf5 library, which is part of the Bioconductor suite of R libraries. It might also be useful to install the free HDF5 viewer, which will allow you to explore the contents of an HDF5 file using a graphic interface.

More about working with HDFview and a hands-on activity here.

First, let's get R set up. Because rhdf5 is part of Bioconductor rather than CRAN (as of May 2020 it was not yet on CRAN), it is installed with BiocManager, as shown in the commented lines below.

# Install rhdf5 package (only need to run if not already installed)

#install.packages("BiocManager")

#BiocManager::install("rhdf5")

# Call the R HDF5 Library

library("rhdf5")

# set working directory to ensure R can find the file we wish to import and where

# we want to save our files

wd <- "~/Git/data/" #This will depend on your local environment

setwd(wd)

Read more about the rhdf5 package here.

Create an HDF5 File in R

Now, let's create a basic H5 file with one group and one dataset in it.

# Create hdf5 file

h5createFile("vegData.h5")

## [1] TRUE

# create a group called aNEONSite within the H5 file

h5createGroup("vegData.h5", "aNEONSite")

## [1] TRUE

# view the structure of the h5 we've created

h5ls("vegData.h5")

## group name otype dclass dim

## 0 / aNEONSite H5I_GROUP

Next, let's create some dummy data to add to our H5 file.

# create some sample, numeric data

a <- rnorm(n=40, mean=1, sd=1)

someData <- matrix(a,nrow=20,ncol=2)

Write the sample data to the H5 file.

# add some sample data to the H5 file located in the aNEONSite group

# we'll call the dataset "temperature"

h5write(someData, file = "vegData.h5", name="aNEONSite/temperature")

# let's check out the H5 structure again

h5ls("vegData.h5")

## group name otype dclass dim

## 0 / aNEONSite H5I_GROUP

## 1 /aNEONSite temperature H5I_DATASET FLOAT 20 x 2

View a "dump" of the entire HDF5 file. NOTE: use this command with CAUTION if you are working with larger datasets!

# we can look at everything too

# but be cautious using this command!

h5dump("vegData.h5")

## $aNEONSite

## $aNEONSite$temperature

## [,1] [,2]

## [1,] 0.33155432 2.4054446

## [2,] 1.14305151 1.3329978

## [3,] -0.57253964 0.5915846

## [4,] 2.82950139 0.4669748

## [5,] 0.47549005 1.5871517

## [6,] -0.04144519 1.9470377

## [7,] 0.63300177 1.9532294

## [8,] -0.08666231 0.6942748

## [9,] -0.90739256 3.7809783

## [10,] 1.84223101 1.3364965

## [11,] 2.04727590 1.8736805

## [12,] 0.33825921 3.4941913

## [13,] 1.80738042 0.5766373

## [14,] 1.26130759 2.2307994

## [15,] 0.52882731 1.6021497

## [16,] 1.59861449 0.8514808

## [17,] 1.42037674 1.0989390

## [18,] -0.65366487 2.5783750

## [19,] 1.74865593 1.6069304

## [20,] -0.38986048 -1.9471878

# Close the file. This is good practice.

H5close()

Add Metadata (attributes)

Let's add some metadata (called attributes in HDF5 land) to our dummy temperature data. First, open up the file.

# open the file; H5Fopen() returns a file handle (an H5IdComponent object)

fid <- H5Fopen("vegData.h5")

# open the dataset we want to add attributes to; this also returns a handle

did <- H5Dopen(fid, "aNEONSite/temperature")

# Provide the NAME and the ATTR (what the attribute says) for the attribute.

h5writeAttribute(did, attr="Here is a description of the data",

name="Description")

h5writeAttribute(did, attr="Meters",

name="Units")

Now let's add some attributes to the aNEONSite group.

# let's add some attributes to the group

did2 <- H5Gopen(fid, "aNEONSite/")

h5writeAttribute(did2, attr="San Joaquin Experimental Range",

name="SiteName")

h5writeAttribute(did2, attr="Southern California",

name="Location")

# close the files, groups and the dataset when you're done writing to them!

H5Dclose(did)

H5Gclose(did2)

H5Fclose(fid)
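Forgetting one of those close calls, or hitting an error partway through, can leave handles open. One way to guard against that is to wrap the steps above in a small function that uses `on.exit()` so the dataset and file are always closed. This is a minimal sketch assuming rhdf5 is installed; the helper name `write_attrs()` is hypothetical and is not part of rhdf5, and the attribute values are placeholders.

```r
# Sketch: write several attributes to a dataset and always close the handles.
# Assumes rhdf5 is installed; write_attrs() is a hypothetical helper, not an rhdf5 function.
library(rhdf5)

write_attrs <- function(file, dataset, attrs) {
  fid <- H5Fopen(file)
  on.exit(H5Fclose(fid), add = TRUE)                 # registered first, runs last
  did <- H5Dopen(fid, dataset)
  on.exit(H5Dclose(did), add = TRUE, after = FALSE)  # runs before the file is closed
  for (nm in names(attrs)) {
    h5writeAttribute(attrs[[nm]], did, name = nm)
  }
  invisible(TRUE)
}

# usage on a throwaway file
h5file <- tempfile(fileext = ".h5")
h5createFile(h5file)
h5write(matrix(rnorm(40), nrow = 20), file = h5file, name = "temperature")
write_attrs(h5file, "temperature", list(Description = "Dummy data", Units = "Celsius"))
attrs_back <- h5readAttributes(h5file, "temperature")
print(attrs_back)
```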

Working with an HDF5 File in R

Now that we've created our H5 file, let's use it! First, let's have a look at the attributes of the dataset and group in the file.

# look at the attributes of the temperature dataset

h5readAttributes(file = "vegData.h5",

name = "aNEONSite/temperature")

## $Description

## [1] "Here is a description of the data"

##

## $Units

## [1] "Meters"

# look at the attributes of the aNEONsite group

h5readAttributes(file = "vegData.h5",

name = "aNEONSite")

## $Location

## [1] "Southern California"

##

## $SiteName

## [1] "San Joaquin Experimental Range"

# let's grab some data from the H5 file

testSubset <- h5read(file = "vegData.h5",

name = "aNEONSite/temperature")

# index = list(rows, columns): NULL selects every row and 1 selects the first column

testSubset2 <- h5read(file = "vegData.h5",

name = "aNEONSite/temperature",

index=list(NULL,1))

H5close()

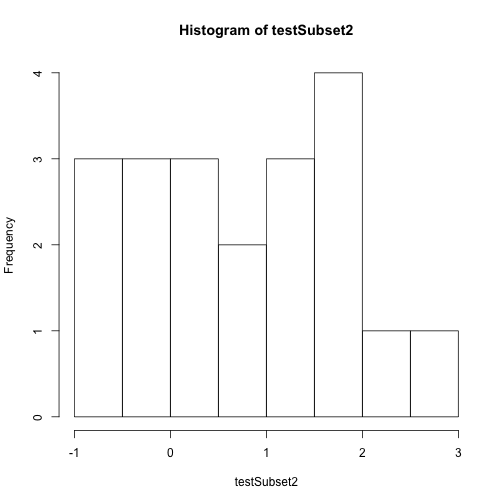

Once we've extracted data from our H5 file, we can work with it in R.

# create a quick plot of the data

hist(testSubset2)
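The `index` argument works along any dimension, so the same pattern can pull a block of rows instead of a column. The sketch below rebuilds a dummy 20 x 2 matrix in a temporary file (so it does not touch vegData.h5) and reads the first five rows and then the second column. It assumes rhdf5 is installed; the dataset name `temperature` and the random values are placeholders.

```r
# Sketch: read subsets of a matrix with h5read(index = ...).
# Assumes rhdf5 is installed; the file, dataset name and values are placeholders.
library(rhdf5)

h5file <- tempfile(fileext = ".h5")
h5createFile(h5file)
someData <- matrix(rnorm(40, mean = 1, sd = 1), nrow = 20, ncol = 2)
h5write(someData, file = h5file, name = "temperature")

# index = list(rows, columns); NULL means "everything" along that dimension
firstRows <- h5read(h5file, "temperature", index = list(1:5, NULL))  # 5 x 2
column2   <- h5read(h5file, "temperature", index = list(NULL, 2))    # 20 x 1
print(firstRows)
summary(as.vector(column2))
```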

Time to practice the skills you've learned. Open up the D17_2013_SJER_vegStr.csv in R.

- Create a new HDF5 file called vegStructure.

- Add a group in your HDF5 file called SJER.

- Add the veg structure data to that group.

- Add some attributes to the SJER group and to the data.

- Now, repeat the above with the D17_2013_SOAP_vegStr csv.

- Name your second group SOAP.

Hint: read.csv() is a good way to read in .csv files.


Create HDF5 Files in R Using Loops

Authors: Ted Hart, Leah Wasser, Adapted From Software Carpentry

Last Updated: Apr 8, 2021

Learning Objectives

After completing this tutorial, you will be able to:

- Understand how HDF5 files can be created and structured in R using the rhdf5 package.

- Understand the three key HDF5 elements: the HDF5 file itself, groups, and datasets.

Things You’ll Need To Complete This Tutorial

To complete this tutorial you will need the most current version of R and, preferably, RStudio loaded on your computer.

R Libraries to Install:

- rhdf5

More on Packages in R – Adapted from Software Carpentry.

Recommended Background

Consider reviewing the documentation for the RHDF5 package.

A Brief Review - About HDF5

The HDF5 file can store large, heterogeneous datasets that include metadata. It also supports efficient data slicing, or extraction of particular subsets of a dataset, which means that you don't have to read large files into your computer's memory (RAM) in their entirety in order to work with them. This saves a lot of time when working with HDF5 data in R. When HDF5 files contain spatial data, they can also be read directly into GIS programs such as QGIS.

The HDF5 format is a self-contained directory structure. We can compare this structure to the folders and files located on your computer. However, in HDF5 files "directories" are called groups and files are called datasets. The HDF5 element itself is a file. Each element in an HDF5 file can have metadata attached to it, making HDF5 files "self-describing".

HDF5 in R

To access HDF5 files in R, you need base HDF5 libraries installed on your computer. It might also be useful to install the free HDF5 viewer which will allow you to explore the contents of an HDF5 file visually using a graphic interface. More about working with HDFview and a hands-on activity here.

The package we'll be using is rhdf5, which is part of the Bioconductor suite of R packages. If you haven't installed this package before, uncomment and run the first two lines of code below to install it. Then use library("rhdf5") to load it.

# Install rhdf5 package (only need to run if not already installed)

#install.packages("BiocManager")

#BiocManager::install("rhdf5")

# Call the R HDF5 Library

library("rhdf5")

# set working directory to ensure R can find the file we wish to import and where

# we want to save our files

wd <- "~/Documents/data/" #This will depend on your local environment

setwd(wd)

Read more about the rhdf5 package here.

Create an HDF5 File in R

Let's start by outlining the structure of the file that we want to create. We'll build a file called "sensorData.h5", that will hold data for a set of sensors at three different locations. Each sensor takes three replicates of two different measurements, every minute.

HDF5 allows us to organize and store data in many ways. Therefore we need to decide what type of structure is ideally suited to our data before creating the HDF5 file. To structure the HDF5 file, we'll start at the file level. We will create a group for each sensor location. Within each location group, we will create two datasets containing temperature and precipitation data collected through time at each location.

So it will look something like this:

- HDF5 FILE (sensorData.H5)
    - Location_One (Group)
        - Temperature (Dataset)
        - Precipitation (Dataset)
    - Location_Two (Group)
        - Temperature (Dataset)
        - Precipitation (Dataset)
    - Location_Three (Group)
        - Temperature (Dataset)
        - Precipitation (Dataset)

Let's first create the HDF5 file and call it "sensorData.h5". Next, we will add a group for each location to the file.

# create hdf5 file

h5createFile("sensorData.h5")

## file '/Users/olearyd/Git/data/sensorData.h5' already exists.

## [1] FALSE

# create group for location 1

h5createGroup("sensorData.h5", "location1")

## Can not create group. Object with name 'location1' already exists.

## [1] FALSE

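Each call returns TRUE when it succeeds. The FALSE results above appear because sensorData.h5 and its location1 group already existed from an earlier run of this code; on a fresh run, both calls return TRUE.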

Remember from the discussion above that we want to create three location groups. The process of creating nested groups can be simplified with loops and nested loops. While the for loop below might seem excessive for adding three groups, it will become increasingly more efficient as we need to add more groups to our file.

# Create loops that will populate 2 additional location "groups" in our HDF5 file

l1 <- c("location2","location3")

for(i in seq_along(l1)){

h5createGroup("sensorData.h5", l1[i])

}

## Can not create group. Object with name 'location2' already exists.

## Can not create group. Object with name 'location3' already exists.
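The "already exists" messages above come from re-running the lesson on an existing file. If you expect to re-run a script, one option is to check for each group before creating it. This sketch assumes rhdf5 is installed and uses its low-level `H5Lexists()` to test for a name; the helper `object_exists()` is hypothetical (not part of rhdf5), and a temporary file stands in for sensorData.h5.

```r
# Sketch: create location groups only if they are not already in the file.
# Assumes rhdf5 is installed; object_exists() is a hypothetical helper.
library(rhdf5)

h5file <- tempfile(fileext = ".h5")
h5createFile(h5file)

object_exists <- function(file, name) {
  fid <- H5Fopen(file)
  on.exit(H5Fclose(fid))
  H5Lexists(fid, name)  # TRUE if a link with this name exists
}

locations <- paste0("location", 1:3)
for (pass in 1:2) {  # run twice to show that the second pass changes nothing
  for (loc in locations) {
    if (!object_exists(h5file, loc)) {
      h5createGroup(h5file, loc)
    }
  }
}
contents <- h5ls(h5file)
print(contents)
```

A simpler alternative, if you always want a clean start, is to delete the file with `file.remove()` before calling `h5createFile()`.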

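Now let's view the structure of our HDF5 file. We'll use the h5ls() function to do this. (The output shown here comes from a file that already contained the precip and temp datasets added in the next step; on a fresh run, only the three location groups are listed.)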

# View HDF5 File Structure

h5ls("sensorData.h5")

## group name otype dclass dim

## 0 / location1 H5I_GROUP

## 1 /location1 precip H5I_DATASET FLOAT 100 x 3

## 2 /location1 temp H5I_DATASET FLOAT 100 x 3

## 3 / location2 H5I_GROUP

## 4 /location2 precip H5I_DATASET FLOAT 100 x 3

## 5 /location2 temp H5I_DATASET FLOAT 100 x 3

## 6 / location3 H5I_GROUP

## 7 /location3 precip H5I_DATASET FLOAT 100 x 3

## 8 /location3 temp H5I_DATASET FLOAT 100 x 3

Our group structure that will contain location information is now set-up. However, it doesn't contain any data. Let's simulate some data pretending that each sensor took replicate measurements for 100 minutes. We'll add a 100 x 3 matrix that will be stored as a dataset in our HDF5 file. We'll populate this dataset with simulated data for each of our groups. We'll use loops to create these matrices and then paste them into each location group within the HDF5 file as datasets.

# Add datasets to each group

for(i in 1:3){

g <- paste("location",i,sep="")

# populate matrix with dummy data

# create precip dataset within each location group

h5write(

matrix(rnorm(300,2,1),

ncol=3,nrow=100),

file = "sensorData.h5",

paste(g,"precip",sep="/"))

#create temperature dataset within each location group

h5write(

matrix(rnorm(300,25,5),

ncol=3,nrow=100),

file = "sensorData.h5",

paste(g,"temp",sep="/"))

}
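The loop above writes each variable with its own `h5write()` call. A nested loop can do the same work from a small table of settings, so adding a new variable only means adding one entry. This is a sketch, not the lesson's method: it assumes rhdf5 is installed, writes to a temporary file instead of sensorData.h5, and the `spec` list of means and standard deviations is a hypothetical way to hold the simulation settings (the values match the ones used above).

```r
# Sketch: nested loops over locations and variables, driven by a settings list.
# Assumes rhdf5 is installed; `spec` is a hypothetical structure for the settings.
library(rhdf5)

h5file <- tempfile(fileext = ".h5")
h5createFile(h5file)

locations <- paste0("location", 1:3)
spec <- list(precip = c(mean = 2, sd = 1),   # simulated precipitation settings
             temp   = c(mean = 25, sd = 5))  # simulated temperature settings

for (loc in locations) {
  h5createGroup(h5file, loc)
  for (var in names(spec)) {
    sim <- matrix(rnorm(300, spec[[var]][["mean"]], spec[[var]][["sd"]]),
                  ncol = 3, nrow = 100)
    h5write(sim, file = h5file, name = paste(loc, var, sep = "/"))
  }
}
contents <- h5ls(h5file)
print(contents)
```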

Understanding Complex Code

Sometimes you may run into code (like the above code) that combines multiple functions. It can be helpful to break the pieces of the code apart to understand their overall function.

Looking at the first h5write() chunk above, let's figure out what it is doing.

We can easily see that part of it tells R to create a matrix (matrix()) that has 3 columns (ncol=3) and 100 rows (nrow=100). That is fairly straightforward, but what about the rest?

Do the following to figure out what it's doing.

- Type paste(g,"precip",sep="/") into the R console. What is the result?

- Type rnorm(300,2,1) into the console and see the result.

- Type g into the console and take note of the result.

- Type help(rnorm) to understand what rnorm does.

And the output:

# 1

paste(g, "precip", sep="/")

## [1] "location3/precip"

# 2

rnorm(300,2,1)

## [1] 1.12160116 2.37206915 1.33052657 1.83329347 2.78469156 0.21951756 1.61651586

## [8] 3.22719604 2.13671092 1.63516541 1.54468880 2.32070535 3.14719285 2.00645027

## [15] 2.06133429 1.45723384 1.43104556 4.15926193 3.85002319 1.15748926 1.93503709

## [22] 1.86915962 1.36021215 2.30083715 2.21046449 -0.02372759 1.54690075 1.87020013

## [29] 0.97110571 1.65512027 2.17813648 1.56675913 2.64604422 2.79411476 -0.14945990

## [36] 2.41051127 2.69399882 1.74000170 1.73502637 1.19408520 1.52722823 2.46432354

## [43] 3.53782484 2.34243381 3.29194930 1.84151991 2.88567260 -0.13647135 3.00296224

## [50] 0.85778037 2.95060383 3.60112607 1.70182011 2.21919357 2.78131358 4.77298934

## [57] 2.05833348 1.83779216 2.69723103 2.99123600 3.50370367 1.94533631 2.27559399

## [64] 2.72276547 0.45838054 1.46426751 2.59186665 1.76153254 0.98961174 1.89289852

## [71] 0.82444265 2.87219678 1.50940120 0.48012497 1.98471512 0.41421129 2.63826815

## [78] 2.27517882 3.23534211 0.97091857 1.65001320 1.22312203 3.21926211 1.61710396

## [85] -0.12658234 1.35538608 1.29323483 2.63494212 2.45595986 1.60861243 0.24972178

## [92] 2.59648815 2.21869671 2.47519870 3.28893524 -0.14351078 2.93625547 2.14517733

## [99] 3.47478297 2.84619247 1.04448393 2.09413526 1.23898831 1.40311390 2.37146803

## [106] 2.19227829 1.90681329 2.26303161 2.99884507 1.74326040 2.11683327 1.81492036

## [113] 2.40780179 1.61687207 2.72739252 3.03310824 1.03081291 2.64457643 1.91816597

## [120] 1.08327451 1.78928748 2.76883928 1.84398295 1.90979931 1.74067337 1.12014125

## [127] 3.05520671 2.25953027 1.53057148 2.77369029 2.00694402 0.74746736 0.89913394

## [134] 1.92816438 2.35494925 0.67463920 3.05980940 2.71711642 0.78155942 3.72305006

## [141] 0.40562629 1.86261895 0.04233856 1.81609868 -0.17246583 1.08597066 0.97175222

## [148] 2.03687618 3.18872115 0.75895259 1.16660578 1.07536466 3.03581454 2.30605076

## [155] 3.01817174 1.88875411 0.99049222 1.93035897 2.62271411 2.59812578 2.26482981

## [162] 1.52726003 1.79621469 2.49783624 2.13485532 2.66744895 0.85641709 3.02384590

## [169] 3.67246567 2.60824228 1.52727352 2.27460561 2.80234576 4.11750031 2.61415438

## [176] 2.83139343 1.72584747 2.51950703 2.99546055 0.67057429 2.24606561 1.00670141

## [183] 1.06021336 2.17286945 1.95552109 2.07089743 2.68618677 0.56123176 3.28782653

## [190] 1.77083238 2.62322126 2.70762375 1.26714051 1.20204779 3.11801841 3.00480662

## [197] 2.60651464 2.67462075 1.35453017 -0.23423081 1.49772729 2.76268752 1.19530190

## [204] 3.10750811 2.52864738 2.26346764 1.83955448 2.49185616 1.91859037 3.22755405

## [211] 2.12798826 1.81429861 2.05723804 1.42868965 0.68103571 1.80038283 1.07693405

## [218] 2.43567001 2.64638560 3.11027077 2.46869879 0.40045458 3.33896319 2.58523866

## [225] 2.38463606 1.61439883 1.72548781 2.68705960 2.53407585 1.71551092 3.14097828

## [232] 3.66333494 2.81083499 3.18241488 -0.53755729 3.39492807 1.55778563 2.26579288

## [239] 2.97848166 0.58794567 1.84097123 3.34139313 1.98807665 2.80674310 2.19412789

## [246] 0.95367365 0.39471881 2.10241933 2.41306228 2.00773589 2.14253569 1.60134185

## [253] -0.65119903 -0.38269825 1.00581006 3.25421978 3.71441667 0.55648668 0.10765730

## [260] -0.47830919 1.84157184 2.30936354 2.37525467 -0.19275434 2.03402162 2.57293173

## [267] 2.63031994 1.15352865 0.90847785 1.28568361 1.84822183 2.98910787 2.63506781

## [274] 2.04770689 0.83206248 4.67738935 1.60943184 0.93227396 1.38921205 3.00806535

## [281] 0.96669941 1.50688173 2.81325862 0.76749654 0.63227293 1.27648973 2.81562324

## [288] 1.65374614 2.20174987 2.27493049 3.94629426 2.58820358 2.89080513 3.37907609

## [295] 0.91029648 3.03539190 0.61781396 0.05210651 1.99853728 0.86705444

# 3

g

## [1] "location3"

# 4

help(rnorm)

The rnorm function creates a set of random numbers drawn from a normal distribution. You specify how many values you want, plus the mean and standard deviation, and R does the rest. Notice that in this loop we create a "precip" and a "temp" dataset and write them into each location group (the loop iterates 3 times, once per group).

The h5write function writes each matrix to a dataset in our HDF5 file (sensorData.h5). It expects the following arguments: h5write(obj, file, name). These are the R object to write, the name of your HDF5 file, and the location (path) of the dataset within the HDF5 file. Therefore, the code:

h5write(matrix(rnorm(300,2,1),ncol=3,nrow=100),file = "sensorData.h5",paste(g,"precip",sep="/"))

tells R to add a 100 x 3 matrix of random values to the sensorData.h5 file within the group whose path is stored in g. It also tells R to name the dataset within that group "precip".
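The path strings and the matrix shape used in that loop can be checked in plain R without creating a file. The sketch below assumes the three group names shown by h5ls() (location1 to location3); the variable names `groups`, `paths`, and `m` are illustrative only, and no rhdf5 call is made.

```r
# Group names as they appear in the h5ls() listing of sensorData.h5
groups <- c("location1", "location2", "location3")

# Build the dataset paths the loop writes to, e.g. "location1/precip"
paths <- character(0)
for (g in groups) {
  paths <- c(paths,
             paste(g, "precip", sep = "/"),
             paste(g, "temp", sep = "/"))
}
print(paths)

# rnorm(300, 2, 1): 300 draws with mean 2 and standard deviation 1,
# reshaped by matrix() into 100 rows by 3 columns
set.seed(1)
m <- matrix(rnorm(300, 2, 1), ncol = 3, nrow = 100)
dim(m)
```

Six paths result (two datasets in each of three groups), which matches the six datasets listed by h5ls().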

HDF5 File Structure

Next, let's check the file structure of the sensorData.h5 file. The h5ls() command tells us whether each element in the file is a group or a dataset. It also identifies the dimensions and types of data stored within the datasets in the HDF5 file. In this case, the precipitation and temperature datasets are of type 'float' and of dimensions 100 x 3 (100 rows by 3 columns).

# List file structure

h5ls("sensorData.h5")

## group name otype dclass dim

## 0 / location1 H5I_GROUP

## 1 /location1 precip H5I_DATASET FLOAT 100 x 3

## 2 /location1 temp H5I_DATASET FLOAT 100 x 3

## 3 / location2 H5I_GROUP

## 4 /location2 precip H5I_DATASET FLOAT 100 x 3

## 5 /location2 temp H5I_DATASET FLOAT 100 x 3

## 6 / location3 H5I_GROUP

## 7 /location3 precip H5I_DATASET FLOAT 100 x 3

## 8 /location3 temp H5I_DATASET FLOAT 100 x 3

Data Types within HDF5

HDF5 files can hold mixed types of data. For example, HDF5 files can store both strings and numbers in the same file. Each dataset in an HDF5 file can be its own type: a dataset can be composed of all integer values, or it could be composed of all strings (characters). A group can contain a mix of string- and number-based datasets. A single dataset can also be mixed, containing a combination of numbers and strings (HDF5 calls this a compound data type).
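The difference between a single-type dataset and a mixed one has a close analogue in R objects. The sketch below uses only base R (the values and column names are made up for illustration): a matrix forces one type on every element, while a data.frame keeps one type per column. As far as we know, rhdf5's h5write() stores a data.frame as a compound (mixed-type) dataset, but check the rhdf5 documentation for your version before relying on that.

```r
# c() coerces numbers and strings to a single type (character),
# so the resulting matrix is all character
mixed_matrix <- matrix(c(1.5, 2.5, "site_a", "site_b"), nrow = 2)
typeof(mixed_matrix)

# A data.frame keeps a separate type for each column
mixed_df <- data.frame(value = c(1.5, 2.5),
                       site = c("site_a", "site_b"),
                       stringsAsFactors = FALSE)
sapply(mixed_df, typeof)
```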

Add Metadata to HDF5 Files

Some metadata can be added to an HDF5 file in R by creating attributes on R objects before adding them to the HDF5 file. Let's look at an example of how we do this. We'll add the units of our data as an attribute of the R matrix before adding it to the HDF5 file. Note that write.attributes = TRUE is needed when you write to the HDF5 file in order to add the metadata to the dataset.

# Create matrix of "dummy" data

p1 <- matrix(rnorm(300,2,1),ncol=3,nrow=100)

# Add attribute to the matrix (units)

attr(p1,"units") <- "millimeters"

# Write the R matrix to the HDF5 file

h5write(p1,file = "sensorData.h5","location1/precip",write.attributes=T)

# Close the HDF5 file

H5close()

We close the file at the end once we are done with it. Otherwise, the next time you open an HDF5 file, you will get a warning message similar to:

Warning message: In h5checktypeOrOpenLoc(file, readonly = TRUE) : An open HDF5 file handle exists. If the file has changed on disk meanwhile, the function may not work properly. Run 'H5close()' to close all open HDF5 object handles.

Reading Data from an HDF5 File

We just learned how to create an HDF5 file and write information to it. We use a different set of functions to read data from an HDF5 file. If read.attributes is set to TRUE when we read the data, we can also see the metadata for the matrix. Furthermore, we can choose to read in a subset, like the first 10 rows of data, rather than loading the entire dataset into R.

# Read in all data contained in the precipitation dataset

l1p1 <- h5read("sensorData.h5", "location1/precip",
               read.attributes = TRUE)

# Read in only the first 10 rows (and all columns) of the precipitation dataset

l1p1s <- h5read("sensorData.h5", "location1/precip",
                read.attributes = TRUE, index = list(1:10, NULL))
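The index = list(1:10, NULL) argument follows the same idea as ordinary R subsetting: 1:10 picks the first ten elements of the first dimension, and NULL keeps every element of the second. The base-R sketch below uses a locally generated stand-in for the precip matrix (not data read from the file) to show the shape you should expect back, plus one caveat: plain R subsetting keeps dim but drops custom attributes such as units.

```r
# Stand-in for the precipitation matrix written earlier (not read from the file)
set.seed(1)
p1 <- matrix(rnorm(300, 2, 1), ncol = 3, nrow = 100)
attr(p1, "units") <- "millimeters"

# In-memory equivalent of index = list(1:10, NULL): rows 1-10, all columns
first10 <- p1[1:10, , drop = FALSE]
dim(first10)

# Subsetting with [ ] keeps dim but drops other attributes such as "units"
is.null(attr(first10, "units"))

# Copy the metadata back if you need it alongside the subset
attr(first10, "units") <- attr(p1, "units")
```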

Now you are ready to go onto the other tutorials in the series to explore more about HDF5 files.

Think about an application for HDF5 that you might have. Create a new HDF5 file that would support the data that you need to store.

Hint: You may be interested in these tutorials:


Subsetting NEON HDF5 hyperspectral files to reduce file size

Authors: Donal O'Leary

Last Updated: Nov 26, 2020

In this tutorial, we will subset an existing HDF5 file containing NEON hyperspectral data. The purpose of this exercise is to generate a smaller file for use in subsequent analyses to reduce the file transfer time and processing power needed.

Learning Objectives

After completing this activity, you will be able to:

- Navigate an HDF5 file to identify the variables of interest.

- Generate a new HDF5 file from a subset of the existing dataset.

- Save the new HDF5 file for future use.

Things You’ll Need To Complete This Tutorial

To complete this tutorial you will need the most current version of R and, preferably, RStudio loaded on your computer.

R Libraries to Install:

- rhdf5: install.packages("BiocManager"); BiocManager::install("rhdf5")

- raster: install.packages('raster')

More on Packages in R - Adapted from Software Carpentry.

Data to Download

The purpose of this tutorial is to reduce a large file (~652Mb) to a smaller size. The download linked here is the original large file, and therefore you may choose to skip this tutorial and download if you are on a slow internet connection or have file size limitations on your device.

Download NEON Teaching Dataset: Full Tile Imaging Spectrometer Data - HDF5 (652Mb)

These hyperspectral remote sensing data provide information on the National Ecological Observatory Network's San Joaquin Experimental Range field site.

These data were collected over the San Joaquin field site located in California (Domain 17) in March of 2019 and processed at NEON headquarters. This particular mosaic tile is named NEON_D17_SJER_DP3_257000_4112000_reflectance.h5. The entire dataset can be accessed by request from the NEON Data Portal.

Set Working Directory: This lesson assumes that you have set your working directory to the location of the downloaded and unzipped data subsets.

An overview of setting the working directory in R can be found here.

R Script & Challenge Code: NEON data lessons often contain challenges that reinforce learned skills. If available, the code for challenge solutions is found in the downloadable R script of the entire lesson, available in the footer of each lesson page.

Recommended Skills

For this tutorial, we recommend that you have some basic familiarity with the HDF5 file format, including knowing how to open HDF5 files (in RStudio or HDFView) and how groups and metadata are structured. To brush up on these skills, we suggest that you work through the Introduction to Working with HDF5 Format in R series before moving on to this tutorial.

Why subset your dataset?

There are many reasons why you may wish to subset your HDF5 file. Primarily, HDF5 files may contain a large amount of information that is not necessary for your purposes. By subsetting the file, you can reduce file size, thereby shrinking your storage needs, shortening file transfer/download times, and reducing your processing time for analysis. In this example, we will take a full HDF5 file of NEON hyperspectral reflectance data from the San Joaquin Experimental Range (SJER) that has a file size of ~652 Mb and make a new HDF5 file with a reduced spatial extent, and a reduced spectral resolution, yielding a file of only ~50.1 Mb. This reduction in file size will make it easier and faster to conduct your analysis and to share your data with others. We will then use this subsetted file in the Introduction to Hyperspectral Remote Sensing Data series.

If you find that downloading the full 652 Mb file takes too much time or storage space, you will find a link to this subsetted file at the top of each lesson in the Introduction to Hyperspectral Remote Sensing Data series.

Exploring the NEON hyperspectral HDF5 file structure

To determine what information we want to put into our subset, we should first look at the structure of the full NEON hyperspectral HDF5 file to see what is included. To do so, we will load the required package for this tutorial (you can un-comment the middle two lines of the code below to install 'BiocManager' and 'rhdf5' if you don't already have them on your computer).

# Install rhdf5 package (only need to run if not already installed)

# install.packages("BiocManager")

# BiocManager::install("rhdf5")

# Load required packages

library(rhdf5)

Next, we define our working directory where we have saved the full HDF5 file of NEON hyperspectral reflectance data from the SJER site. Note that the filepath to the working directory will depend on your local environment. Then, we store the full path to the HDF5 file in a string (f_full).

# set working directory to ensure R can find the file we wish to import and where

# we want to save our files. Be sure to move the download into your working directory!

wd <- "~/Documents/data/" # This will depend on your local environment

setwd(wd)

# Make the name of our HDF5 file a variable

f_full <- paste0(wd,"NEON_D17_SJER_DP3_257000_4112000_reflectance.h5")

Next, let's take a look at the structure of the full NEON hyperspectral reflectance HDF5 file.

View(h5ls(f_full, all=T))

Wow, there is a lot of information in there! The majority of the groups contained within this file are Metadata, most of which are used for processing the raw observations into the reflectance product that we want to use. For demonstration and teaching purposes, we will not need this information. What we will need are things like the Coordinate_System information (so that we can georeference these data), the Wavelength dataset (so that we can match up each band with its appropriate wavelength in the electromagnetic spectrum), and of course the Reflectance_Data themselves. You can also see that each group and dataset has a number of associated attributes (in the 'num_attrs' column). We will want to copy over those attributes into the data subset as well. But first, we need to define each of the groups that we want to populate in our new HDF5 file.

Create new HDF5 file framework

To make a new subset HDF5 file, we must first create an empty file with the appropriate name; then we will fill in that file with the essential data and attributes that we want to include. Note that the function h5createFile() will not overwrite an existing file. Therefore, if you have already created or downloaded this file, this step will not succeed!

Each function should return TRUE if it runs correctly.

# First, create a name for the new file

f <- paste0(wd, "NEON_hyperspectral_tutorial_example_subset.h5")

# create hdf5 file

h5createFile(f)

## [1] TRUE

# Now we create the groups that we will use to organize our data

h5createGroup(f, "SJER/")

## [1] TRUE

h5createGroup(f, "SJER/Reflectance")

## [1] TRUE

h5createGroup(f, "SJER/Reflectance/Metadata")

## [1] TRUE

h5createGroup(f, "SJER/Reflectance/Metadata/Coordinate_System")

## [1] TRUE

h5createGroup(f, "SJER/Reflectance/Metadata/Spectral_Data")

## [1] TRUE

Adding group attributes

One of the great things about HDF5 files is that they can contain data and attributes within the same group.

As explained within the Introduction to Working with HDF5 Format in R series, attributes are a type of metadata that are associated with an HDF5 group or dataset. There may be multiple attributes associated with each group and/or dataset. Attributes come with a name and an associated array of information.

In this tutorial, we will read the existing attribute data from the full hyperspectral tile using the h5readAttributes() function (which returns a list of attributes), then loop through those attributes and write each attribute to its appropriate group using the h5writeAttribute() function.

First, we will do this for the low-level "SJER/Reflectance" group. In this step, we are adding attributes to a group rather than a dataset. To do so, we must first open a file and group interface using the H5Fopen() and H5Gopen() functions; then we can use h5writeAttribute() to add attributes to that group.

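# Read all attributes of the Reflectance group in the full tile (a named list)
a <- h5readAttributes(f_full, "/SJER/Reflectance/")

# Open handles to the new file and to the group we want to annotate
fid <- H5Fopen(f)
g <- H5Gopen(fid, "SJER/Reflectance")

# Write each attribute to the group, keeping its original name
for (i in seq_along(a)) {
  h5writeAttribute(attr = a[[i]], h5obj = g, name = names(a)[i])
}

# It's always a good idea to close the file connection immediately
# after finishing each step that leaves an open connection.
h5closeAll()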

Next, we will loop through each of the datasets within the Coordinate_System group, and copy those (and their attributes, if present) from the full tile to our subset file. The Coordinate_System group contains many important pieces of information for geolocating our data, so we need to make sure that the subset file has that information.

# List every object (groups and datasets) in the full tile file
ls <- h5ls(f_full, all = TRUE)

# Keep the names of the objects stored directly in the Coordinate_System group
cg <- unique(ls[ls$group == "/SJER/Reflectance/Metadata/Coordinate_System", ]$name)

# Loop through the list of datasets that we just made above
for (i in seq_along(cg)) {
  print(cg[i])
  # Path of the individual dataset within the Coordinate_System group
  path <- paste0("/SJER/Reflectance/Metadata/Coordinate_System/", cg[i])
  # Read the dataset
  d <- h5read(f_full, path)
  # Read the associated attributes (if any)
  a <- h5readAttributes(f_full, path)
  # Assign the attributes (if any) to the dataset
  attributes(d) <- a
  # Finally, write the dataset and its attributes to the new HDF5 file
  h5write(obj = d, file = f, name = path, write.attributes = TRUE)
}

## [1] "Coordinate_System_String"

## [1] "EPSG Code"

## [1] "Map_Info"

## [1] "Proj4"
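The filter on ls$group keeps only objects stored directly in the Coordinate_System group, because h5ls() reports each object's parent path in its group column and its own name in its name column. The sketch below runs the same filter on a small hand-made data.frame that imitates a few rows of that output (the rows are illustrative, not the real listing), so it needs only base R.

```r
# Hand-made stand-in for a few rows of h5ls(f_full, all = TRUE)
ls_demo <- data.frame(
  group = c("/SJER/Reflectance/Metadata",
            "/SJER/Reflectance/Metadata/Coordinate_System",
            "/SJER/Reflectance/Metadata/Coordinate_System",
            "/SJER/Reflectance/Metadata/Coordinate_System",
            "/SJER/Reflectance/Metadata/Coordinate_System",
            "/SJER/Reflectance/Metadata/Spectral_Data"),
  name = c("Coordinate_System", "Coordinate_System_String", "EPSG Code",
           "Map_Info", "Proj4", "Wavelength"),
  otype = c("H5I_GROUP", rep("H5I_DATASET", 5)),
  stringsAsFactors = FALSE
)

# Same filter as in the loop above: exact match on the parent group
target <- "/SJER/Reflectance/Metadata/Coordinate_System"
cg_demo <- unique(ls_demo[ls_demo$group == target, ]$name)
print(cg_demo)
```

The group itself (listed under its parent, /SJER/Reflectance/Metadata) and datasets in other groups, such as Wavelength, are left out.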

Spectral Subsetting

The goal of subsetting this dataset is to substantially reduce the file size, making it faster to download and process these data. While each AOP mosaic tile is not particularly large in terms of its spatial scale (1 km by 1 km at 1 m resolution = 1,000,000 pixels, or about half as many pixels as shown on a standard 1080p computer screen), the 426 spectral bands available result in a fairly large file size. Therefore, we will reduce the spectral resolution of these data by selecting every fourth band in the dataset, which reduces the file size to about 1/4 of the original!

Some circumstances demand the full spectral resolution file. For example, if you wanted to discern between the spectral signatures of similar minerals, or if you were conducting an analysis of the differences in the 'red edge' between plant functional types, you would want to use the full spectral resolution of the original hyperspectral dataset. Still, we can use far fewer bands for demonstration and teaching purposes, while still getting a good sense of what these hyperspectral data can do.

# First, we make our 'index', a vector of numbers that will allow us to select every fourth band, using the "sequence" function seq()

idx <- seq(from = 1, to = 426, by = 4)

# We then use this index to select particular wavelengths from the full tile using the "index=" argument

wavelengths <- h5read(file = f_full,

name = "SJER/Reflectance/Metadata/Spectral_Data/Wavelength",

index = list(idx)

)

# As per above, we also need the wavelength attributes

wavelength.attributes <- h5readAttributes(file = f_full,

name = "SJER/Reflectance/Metadata/Spectral_Data/Wavelength")

attributes(wavelengths) <- wavelength.attributes

# Finally, write the subset of wavelengths and their attributes to the subset file

h5write(obj=wavelengths, file=f,

name="SJER/Reflectance/Metadata/Spectral_Data/Wavelength",

write.attributes=T)
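A quick check of the band index in base R supports the claim that keeping every fourth band retains roughly a quarter of the spectral data. The numbers come from the tutorial itself (426 bands, a 1,000 x 1,000 pixel tile); the 1920 x 1080 size is the standard 1080p resolution used for the screen comparison above.

```r
# Every fourth band, starting at band 1
idx <- seq(from = 1, to = 426, by = 4)
length(idx)   # 107 bands kept
range(idx)    # first and last band kept: 1 and 425

# Fraction of the 426 bands that remain after spectral subsetting
band_fraction <- length(idx) / 426
round(band_fraction, 3)

# A 1 km x 1 km tile at 1 m resolution versus a 1080p screen
tile_pixels <- 1000 * 1000
screen_pixels <- 1920 * 1080
round(tile_pixels / screen_pixels, 2)
```

The 107 bands are the same 107 that later appear in the Dimensions attribute of the subset.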

Spatial Subsetting

Even after spectral subsetting, our file size would be greater than 100Mb.

herefore, we will also perform a spatial subsetting process to further

reduce our file size. Now, we need to figure out which part of the full image

that we want to extract for our subset. It takes a number of steps in order

to read in a band of data and plot the reflectance values - all of which are

thoroughly described in the Intro to Working with Hyperspectral Remote Sensing Data in HDF5 Format in R

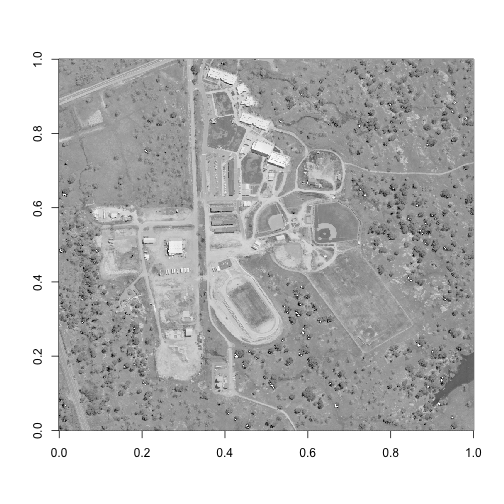

tutorial. For now, let's focus on the essentials for our problem at hand. In

order to explore the spatial qualities of this dataset, let's plot a single

band as an overview map to see what objects and land cover types are contained

within this mosaic tile. The Reflectance_Data dataset has three dimensions in

the order of bands, columns, rows. We want to extract a single band, and all

1,000 columns and rows, so we will feed those values into the index= argument

as a list. For this example, we will select the 58th band in the hyperspectral

dataset, which corresponds to a wavelength of 667nm, which is in the red end of

the visible electromagnetic spectrum. We will use NULL in the column and row

position to indicate that we want all of the columns and rows (we agree that

it is weird that NULL indicates "all" in this circumstance, but that is the

default behavior for this, and many other, functions).

# Extract or "slice" data for band 58 from the HDF5 file

b58 <- h5read(f_full,name = "SJER/Reflectance/Reflectance_Data",

index=list(58,NULL,NULL))

h5closeAll()

# convert from array to matrix

b58 <- b58[1,,]

# Make a plot to view this band

image(log(b58), col=grey(0:100/100))
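The b58[1,,] step works because indexing a single position in the first dimension drops that dimension, turning a 1 x 1000 x 1000 array into a 1000 x 1000 matrix that image() can plot. The sketch below shows the same behaviour on a small made-up array (its dimensions are chosen only for illustration); drop() is an equivalent base-R way to remove length-one dimensions.

```r
# Small stand-in for the array returned by h5read(..., index = list(58, NULL, NULL)):
# 1 band x 4 columns x 3 rows
band_array <- array(1:12, dim = c(1, 4, 3))

# Selecting the only band drops the first dimension
band_matrix <- band_array[1, , ]
dim(band_matrix)

# drop() removes every length-one dimension and gives the same matrix
identical(band_matrix, drop(band_array))
```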

As we can see here, this hyperspectral reflectance tile contains a school campus that is under construction. There are many different land cover types contained here, which makes it a great example! Perhaps the most unique feature shown is in the bottom right corner of this image, where we can see the tip of a small reservoir. Let's be sure to capture this feature in our spatial subset, as well as a few other land cover types (irrigated grass, trees, bare soil, and buildings).

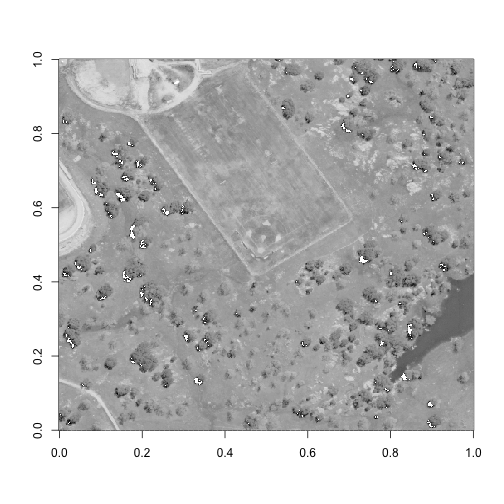

While raster images count their pixels from the top left corner, R's image() function draws a matrix starting from the bottom left corner. Therefore, in this plot rows are counted from the bottom to the top, and columns are counted from the left to the right. If we want to sample the bottom right quadrant of this image, we need to select rows 1 through 500 (bottom half) and columns 501 through 1000 (right half). Note that, as above, the index= argument in h5read() requires a list of three dimensions for this example, in the order of bands, columns, rows.

subset_rows <- 1:500

subset_columns <- 501:1000

# Extract or "slice" data for band 58 from the HDF5 file

b58 <- h5read(f_full,name = "SJER/Reflectance/Reflectance_Data",

index=list(58,subset_columns,subset_rows))

h5closeAll()

# convert from array to matrix

b58 <- b58[1,,]

# Make a plot to view this band

image(log(b58), col=grey(0:100/100))

Perfect - now we have a spatial subset that includes all of the different land cover types that we are interested in investigating.

- Pick your own area of interest for this spatial subset, and find the rows and columns that capture that area. Can you include some solar panels, as well as the water body?

- Does it make a difference if you use a band from another part of the electromagnetic spectrum, such as the near-infrared? Hint: you can rerun the h5read() call that created the wavelengths variable above without the index= argument to get the full list of band wavelengths.

Extracting a subset

Now that we have determined our ideal spectral and spatial subsets for our analysis, we are ready to put both of those pieces of information into our h5read() function to extract our example subset out of the full NEON hyperspectral dataset. Here, we are taking every fourth band (using our idx variable), columns 501:1000 (the right half of the tile), and rows 1:500 (the bottom half of the tile). This results in extracting every fourth band of the bottom-right quadrant of the mosaic tile.

# Read in reflectance data.

# Note the list that we feed into the index argument!

# This tells the h5read() function which bands, columns, and
# rows to read. This is ultimately how we reduce the file size.

hs <- h5read(file = f_full,

name = "SJER/Reflectance/Reflectance_Data",

index = list(idx, subset_columns, subset_rows)

)
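Before writing anything, it can help to confirm what shape h5read() should return for this index list and how much of the full tile it keeps. The sketch below recreates idx, subset_columns, and subset_rows exactly as defined earlier and uses only base R; the expected shape should match dim(hs), which is printed as 107 500 500 further down.

```r
# Same selections as above
idx <- seq(from = 1, to = 426, by = 4)
subset_columns <- 501:1000
subset_rows <- 1:500

# h5read() returns one element per selected index in each dimension
expected_dim <- c(length(idx), length(subset_columns), length(subset_rows))
print(expected_dim)

# Share of the full 426 x 1000 x 1000 reflectance cube that is kept
kept_fraction <- prod(expected_dim) / (426 * 1000 * 1000)
round(kept_fraction, 3)
```

This is only a count of array values (about 6% of the original); the final file size also depends on compression settings and on the metadata you copy, so it will not scale exactly.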

As in the 'Adding group attributes' section above, we will need to add the attributes to the hyperspectral data (hs) before writing it to the new HDF5 subset file (f). The hs variable already has one attribute, $dim, which contains the actual dimensions of the hs array and will be important for writing the array to the f file later. We will want to combine this attribute with all of the other Reflectance_Data attributes from the original HDF5 file, f_full. However, some of the attributes will no longer be valid, such as the Dimensions and Spatial_Extent_meters attributes, so we will need to overwrite those before assigning these attributes to the hs variable and writing it to the f file.

# grab the '$dim' attribute - as this will be needed

# when writing the file at the bottom

hsd <- attributes(hs)

# We also need the attributes for the reflectance data.

ha <- h5readAttributes(file = f_full,

name = "SJER/Reflectance/Reflectance_Data")

# However, some of the attributes are no longer valid since
# we changed the spatial extent of this dataset. Therefore,
# we will need to overwrite those with the correct values.

ha$Dimensions <- c(500,500,107) # Note that the HDF5 file saves dimensions in a different order than R reads them

ha$Spatial_Extent_meters[1] <- ha$Spatial_Extent_meters[1]+500

ha$Spatial_Extent_meters[3] <- ha$Spatial_Extent_meters[3]+500

attributes(hs) <- c(hsd,ha)

# View the combined attributes to ensure they are correct

attributes(hs)

## $dim

## [1] 107 500 500

##

## $Cloud_conditions

## [1] "For cloud conditions information see Weather Quality Index dataset."

##

## $Cloud_type

## [1] "Cloud type may have been selected from multiple flight trajectories."

##

## $Data_Ignore_Value

## [1] -9999

##

## $Description

## [1] "Atmospherically corrected reflectance."

##

## $Dimension_Labels

## [1] "Line, Sample, Wavelength"

##

## $Dimensions

## [1] 500 500 107

##

## $Interleave

## [1] "BSQ"

##

## $Scale_Factor

## [1] 10000

##

## $Spatial_Extent_meters

## [1] 257500 258000 4112500 4113000

##

## $Spatial_Resolution_X_Y

## [1] 1 1

##

## $Units

## [1] "Unitless."

##

## $Units_Valid_range

## [1] 0 10000
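The two overwritten attributes can be checked with a little base-R arithmetic. In the sketch below, the full-tile extent is an assumption inferred from the tile name (NEON_D17_SJER_DP3_257000_4112000) and its 1 km size, in the order xmin, xmax, ymin, ymax; the results match the Dimensions and Spatial_Extent_meters values printed above.

```r
# dim(hs) as R reports it: bands, columns, rows
hs_dim <- c(107L, 500L, 500L)

# rhdf5 reverses the dimension order relative to the HDF5 file,
# so the file-order Dimensions attribute is the reverse of dim(hs)
rev(hs_dim)

# Assumed full-tile extent (xmin, xmax, ymin, ymax), inferred from the tile name
full_extent <- c(257000, 258000, 4112000, 4113000)

# Same edits as above: shift xmin and ymin 500 m into the tile
sub_extent <- full_extent
sub_extent[1] <- sub_extent[1] + 500
sub_extent[3] <- sub_extent[3] + 500
print(sub_extent)
```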

# Finally, write the hyperspectral data, plus attributes,

# to our new file 'f'.

h5write(obj=hs, file=f,

name="SJER/Reflectance/Reflectance_Data",

write.attributes=T)

## You created a large dataset with compression and chunking.

## The chunk size is equal to the dataset dimensions.

## If you want to read subsets of the dataset, you should testsmaller chunk sizes to improve read times.

# It's always a good idea to close the HDF5 file connection

# before moving on.

h5closeAll()

That's it! We just created a subset of the original HDF5 file, and included the most essential groups and metadata for exploratory analysis. You may consider adding other information, such as the weather quality indicator, when subsetting datasets for your own purposes.

If you want to take a look at the subset that you just made, run the h5ls() function:

View(h5ls(f, all=T))